Upgrade minimist to 1.2.6

This CL updates minimist from 1.2.5 to 1.2.6 to fix a critical prototype
pollution vulnerability: https://nvd.nist.gov/vuln/detail/CVE-2021-44906
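Some background on this bug class: prototype pollution happens when a parser turns user-supplied dotted keys into nested object writes without filtering the path segments. A key routed through `__proto__` (or `constructor` followed by `prototype`) then writes onto `Object.prototype`, which every plain object inherits from. The sketch below is a hypothetical `setPath` helper, not minimist's code. It illustrates the general kind of guard involved; the helper name and the choice to reject the whole path are assumptions.

```typescript
// Hypothetical illustration of the bug class behind CVE-2021-44906.
// This is NOT minimist's implementation; names and behavior are assumptions.

type Dict = Record<string, unknown>;

// Path segments that can redirect a write onto a shared prototype.
const UNSAFE_KEYS = new Set<string>(["__proto__", "constructor", "prototype"]);

// Writes `value` at a dotted path such as "server.port" inside `target`.
// Returns false, writing nothing, if any segment is unsafe.
function setPath(target: Dict, dottedKey: string, value: unknown): boolean {
  const keys = dottedKey.split(".");
  if (keys.some((k) => UNSAFE_KEYS.has(k))) {
    return false;
  }
  let o: Dict = target;
  for (const k of keys.slice(0, -1)) {
    const next = o[k];
    // Descend only into an own, non-null object; otherwise start a fresh one.
    if (!Object.prototype.hasOwnProperty.call(o, k) || typeof next !== "object" || next === null) {
      o[k] = {};
    }
    o = o[k] as Dict;
  }
  o[keys[keys.length - 1]] = value;
  return true;
}

const args: Dict = {};
console.log(setPath(args, "server.port", 8080));         // true
console.log(setPath(args, "__proto__.polluted", "yes")); // false
console.log(({} as Dict).polluted);                       // undefined
```

Rejecting the whole path, rather than silently skipping one segment, keeps the caller aware that an argument was dropped; a real parser might log or throw instead.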

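The fix was made by running:

`npm run install-deps audit fix --audit-level=critical`

Besides minimist, the same run updated several other dev dependencies in
the lockfile (among them log4js, engine.io, follow-redirects, fs-extra and
nth-check) and replaced base64-arraybuffer with its fork,
@socket.io/base64-arraybuffer.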
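One way to confirm the result is to scan the lockfile for any remaining copy of minimist older than 1.2.6. The sketch below assumes the npm v7+ layout seen in this CL's `node_modules/.package-lock.json`: a top-level `packages` map keyed by install path. `findVulnerableMinimist`, `compareVersions` and the `node_modules/foo` sample entry are hypothetical, and the version comparison handles plain numeric versions only.

```typescript
// Hypothetical lockfile check; assumes the npm v7+ layout with a top-level
// "packages" map keyed by install path (e.g. "node_modules/minimist").

interface LockEntry {
  version?: string;
}

interface Lockfile {
  packages?: Record<string, LockEntry>;
}

// Compares plain numeric versions such as "1.2.5" and "1.2.10";
// any "-prerelease" or "+build" suffix is ignored.
function compareVersions(a: string, b: string): number {
  const pa = a.split(/[-+]/)[0].split(".").map(Number);
  const pb = b.split(/[-+]/)[0].split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) {
      return diff;
    }
  }
  return 0;
}

// Returns "path@version" for every minimist install older than `fixedIn`,
// including copies nested under another package's node_modules.
function findVulnerableMinimist(lock: Lockfile, fixedIn = "1.2.6"): string[] {
  const packages: Record<string, LockEntry> = lock.packages ?? {};
  const hits: string[] = [];
  for (const [path, entry] of Object.entries(packages)) {
    const isMinimist =
      path === "node_modules/minimist" || path.endsWith("/node_modules/minimist");
    if (isMinimist && entry.version !== undefined && compareVersions(entry.version, fixedIn) < 0) {
      hits.push(`${path}@${entry.version}`);
    }
  }
  return hits;
}

// Hypothetical sample data; "node_modules/foo" is not a real entry in this CL.
const lock: Lockfile = {
  packages: {
    "node_modules/minimist": { version: "1.2.6" },
    "node_modules/foo/node_modules/minimist": { version: "1.2.5" },
    "node_modules/minimist-options": { version: "4.1.0" },
  },
};
console.log(findVulnerableMinimist(lock)); // [ 'node_modules/foo/node_modules/minimist@1.2.5' ]
```

To run it against a checkout, parse the file first, for example with `JSON.parse(fs.readFileSync("node_modules/.package-lock.json", "utf8"))` using `fs` from `node:fs`, and pass the result in; an empty array means no vulnerable copy is recorded.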

Bug: none
Change-Id: I51103b525d9969e03000d55af86cebdabc7e0366
Reviewed-on: https://chromium-review.googlesource.com/c/devtools/devtools-frontend/+/3591079
Reviewed-by: Mathias Bynens <mathias@chromium.org>
Commit-Queue: Brandon Walderman <brwalder@microsoft.com>

diff --git a/node_modules/.package-lock.json b/node_modules/.package-lock.json

index cd00758..e2571b2 100644

--- a/node_modules/.package-lock.json

+++ b/node_modules/.package-lock.json

@@ -537,6 +537,15 @@

"integrity": "sha512-+iTbntw2IZPb/anVDbypzfQa+ay64MW0Zo8aJ8gZPWMMK6/OubMVb6lUPMagqjOPnmtauXnFCACVl3O7ogjeqQ==",

"dev": true

},

+ "node_modules/@socket.io/base64-arraybuffer": {

+ "version": "1.0.2",

+ "resolved": "https://registry.npmjs.org/@socket.io/base64-arraybuffer/-/base64-arraybuffer-1.0.2.tgz",

+ "integrity": "sha512-dOlCBKnDw4iShaIsH/bxujKTM18+2TOAsYz+KSc11Am38H4q5Xw8Bbz97ZYdrVNM+um3p7w86Bvvmcn9q+5+eQ==",

+ "dev": true,

+ "engines": {

+ "node": ">= 0.6.0"

+ }

+ },

"node_modules/@trysound/sax": {

"version": "0.2.0",

"resolved": "https://registry.npmjs.org/@trysound/sax/-/sax-0.2.0.tgz",

@@ -804,6 +813,16 @@

"integrity": "sha512-sPMaZm2M7ZBaVnajY1WFszcl+6CR/daMt77dD8PpMH1wRKinRMDV40U55VmObJ58Oehu7K2JxmSonkm8BlbfxA==",

"dev": true

},

+ "node_modules/@types/yauzl": {

+ "version": "2.9.1",

+ "resolved": "https://registry.npmjs.org/@types/yauzl/-/yauzl-2.9.1.tgz",

+ "integrity": "sha512-A1b8SU4D10uoPjwb0lnHmmu8wZhR9d+9o2PKBQT2jU5YPTKsxac6M2qGAdY7VcL+dHHhARVUDmeg0rOrcd9EjA==",

+ "dev": true,

+ "optional": true,

+ "dependencies": {

+ "@types/node": "*"

+ }

+ },

"node_modules/@typescript-eslint/eslint-plugin": {

"version": "5.20.0",

"resolved": "https://registry.npmjs.org/@typescript-eslint/eslint-plugin/-/eslint-plugin-5.20.0.tgz",

@@ -1294,15 +1313,6 @@

"integrity": "sha512-3oSeUO0TMV67hN1AmbXsK4yaqU7tjiHlbxRDZOpH0KW9+CeX4bRAaX0Anxt0tx2MrpRpWwQaPwIlISEJhYU5Pw==",

"dev": true

},

- "node_modules/base64-arraybuffer": {

- "version": "1.0.1",

- "resolved": "https://registry.npmjs.org/base64-arraybuffer/-/base64-arraybuffer-1.0.1.tgz",

- "integrity": "sha512-vFIUq7FdLtjZMhATwDul5RZWv2jpXQ09Pd6jcVEOvIsqCWTRFD/ONHNfyOS8dA/Ippi5dsIgpyKWKZaAKZltbA==",

- "dev": true,

- "engines": {

- "node": ">= 0.6.0"

- }

- },

"node_modules/base64-js": {

"version": "1.5.1",

"resolved": "https://registry.npmjs.org/base64-js/-/base64-js-1.5.1.tgz",

@@ -1984,9 +1994,9 @@

"dev": true

},

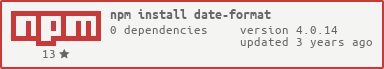

"node_modules/date-format": {

- "version": "3.0.0",

- "resolved": "https://registry.npmjs.org/date-format/-/date-format-3.0.0.tgz",

- "integrity": "sha512-eyTcpKOcamdhWJXj56DpQMo1ylSQpcGtGKXcU0Tb97+K56/CF5amAqqqNj0+KvA0iw2ynxtHWFsPDSClCxe48w==",

+ "version": "4.0.7",

+ "resolved": "https://registry.npmjs.org/date-format/-/date-format-4.0.7.tgz",

+ "integrity": "sha512-k5xqlzDGIfv2N/DHR/BR8Kc4N9CRy9ReuDkmdxeX/jNfit94QXd36emWMm40ZOEDKNm/c91yV9EO3uGPkR7wWQ==",

"dev": true,

"engines": {

"node": ">=4.0"

@@ -2209,9 +2219,9 @@

}

},

"node_modules/engine.io": {

- "version": "6.1.0",

- "resolved": "https://registry.npmjs.org/engine.io/-/engine.io-6.1.0.tgz",

- "integrity": "sha512-ErhZOVu2xweCjEfYcTdkCnEYUiZgkAcBBAhW4jbIvNG8SLU3orAqoJCiytZjYF7eTpVmmCrLDjLIEaPlUAs1uw==",

+ "version": "6.1.3",

+ "resolved": "https://registry.npmjs.org/engine.io/-/engine.io-6.1.3.tgz",

+ "integrity": "sha512-rqs60YwkvWTLLnfazqgZqLa/aKo+9cueVfEi/dZ8PyGyaf8TLOxj++4QMIgeG3Gn0AhrWiFXvghsoY9L9h25GA==",

"dev": true,

"dependencies": {

"@types/cookie": "^0.4.1",

@@ -2222,7 +2232,7 @@

"cookie": "~0.4.1",

"cors": "~2.8.5",

"debug": "~4.3.1",

- "engine.io-parser": "~5.0.0",

+ "engine.io-parser": "~5.0.3",

"ws": "~8.2.3"

},

"engines": {

@@ -2230,12 +2240,12 @@

}

},

"node_modules/engine.io-parser": {

- "version": "5.0.2",

- "resolved": "https://registry.npmjs.org/engine.io-parser/-/engine.io-parser-5.0.2.tgz",

- "integrity": "sha512-wuiO7qO/OEkPJSFueuATIXtrxF7/6GTbAO9QLv7nnbjwZ5tYhLm9zxvLwxstRs0dcT0KUlWTjtIOs1T86jt12g==",

+ "version": "5.0.3",

+ "resolved": "https://registry.npmjs.org/engine.io-parser/-/engine.io-parser-5.0.3.tgz",

+ "integrity": "sha512-BtQxwF27XUNnSafQLvDi0dQ8s3i6VgzSoQMJacpIcGNrlUdfHSKbgm3jmjCVvQluGzqwujQMPAoMai3oYSTurg==",

"dev": true,

"dependencies": {

- "base64-arraybuffer": "~1.0.1"

+ "@socket.io/base64-arraybuffer": "~1.0.2"

},

"engines": {

"node": ">=10.0.0"

@@ -2358,6 +2368,19 @@

"esbuild-windows-arm64": "0.14.13"

}

},

+ "node_modules/esbuild-linux-64": {

+ "version": "0.14.13",

+ "resolved": "https://registry.npmjs.org/esbuild-linux-64/-/esbuild-linux-64-0.14.13.tgz",

+ "integrity": "sha512-P6OFAfcoUvE7g9h/0UKm3qagvTovwqpCF1wbFLWe/BcCY8BS1bR/+SxUjCeKX2BcpIsg4/43ezHDE/ntg/iOpw==",

+ "cpu": [

+ "x64"

+ ],

+ "dev": true,

+ "optional": true,

+ "os": [

+ "linux"

+ ]

+ },

"node_modules/escalade": {

"version": "3.1.1",

"resolved": "https://registry.npmjs.org/escalade/-/escalade-3.1.1.tgz",

@@ -3257,25 +3280,30 @@

"node": "^10.12.0 || >=12.0.0"

}

},

- "node_modules/flat-cache/node_modules/flatted": {

+ "node_modules/flatted": {

"version": "3.2.5",

"resolved": "https://registry.npmjs.org/flatted/-/flatted-3.2.5.tgz",

"integrity": "sha512-WIWGi2L3DyTUvUrwRKgGi9TwxQMUEqPOPQBVi71R96jZXJdFskXEmf54BoZaS1kknGODoIGASGEzBUYdyMCBJg==",

"dev": true

},

- "node_modules/flatted": {

- "version": "2.0.2",

- "resolved": "https://registry.npmjs.org/flatted/-/flatted-2.0.2.tgz",

- "integrity": "sha512-r5wGx7YeOwNWNlCA0wQ86zKyDLMQr+/RB8xy74M4hTphfmjlijTSSXGuH8rnvKZnfT9i+75zmd8jcKdMR4O6jA==",

- "dev": true

- },

"node_modules/follow-redirects": {

- "version": "1.13.2",

- "resolved": "https://registry.npmjs.org/follow-redirects/-/follow-redirects-1.13.2.tgz",

- "integrity": "sha512-6mPTgLxYm3r6Bkkg0vNM0HTjfGrOEtsfbhagQvbxDEsEkpNhw582upBaoRZylzen6krEmxXJgt9Ju6HiI4O7BA==",

+ "version": "1.14.9",

+ "resolved": "https://registry.npmjs.org/follow-redirects/-/follow-redirects-1.14.9.tgz",

+ "integrity": "sha512-MQDfihBQYMcyy5dhRDJUHcw7lb2Pv/TuE6xP1vyraLukNDHKbDxDNaOE3NbCAdKQApno+GPRyo1YAp89yCjK4w==",

"dev": true,

+ "funding": [

+ {

+ "type": "individual",

+ "url": "https://github.com/sponsors/RubenVerborgh"

+ }

+ ],

"engines": {

"node": ">=4.0"

+ },

+ "peerDependenciesMeta": {

+ "debug": {

+ "optional": true

+ }

}

},

"node_modules/fs-constants": {

@@ -3285,17 +3313,17 @@

"dev": true

},

"node_modules/fs-extra": {

- "version": "8.1.0",

- "resolved": "https://registry.npmjs.org/fs-extra/-/fs-extra-8.1.0.tgz",

- "integrity": "sha512-yhlQgA6mnOJUKOsRUFsgJdQCvkKhcz8tlZG5HBQfReYZy46OwLcY+Zia0mtdHsOo9y/hP+CxMN0TU9QxoOtG4g==",

+ "version": "10.1.0",

+ "resolved": "https://registry.npmjs.org/fs-extra/-/fs-extra-10.1.0.tgz",

+ "integrity": "sha512-oRXApq54ETRj4eMiFzGnHWGy+zo5raudjuxN0b8H7s/RU2oW0Wvsx9O0ACRN/kRq9E8Vu/ReskGB5o3ji+FzHQ==",

"dev": true,

"dependencies": {

"graceful-fs": "^4.2.0",

- "jsonfile": "^4.0.0",

- "universalify": "^0.1.0"

+ "jsonfile": "^6.0.1",

+ "universalify": "^2.0.0"

},

"engines": {

- "node": ">=6 <7 || >=8"

+ "node": ">=12"

}

},

"node_modules/fs.realpath": {

@@ -4291,11 +4319,14 @@

}

},

"node_modules/jsonfile": {

- "version": "4.0.0",

- "resolved": "https://registry.npmjs.org/jsonfile/-/jsonfile-4.0.0.tgz",

- "integrity": "sha1-h3Gq4HmbZAdrdmQPygWPnBDjPss=",

+ "version": "6.1.0",

+ "resolved": "https://registry.npmjs.org/jsonfile/-/jsonfile-6.1.0.tgz",

+ "integrity": "sha512-5dgndWOriYSm5cnYaJNhalLNDKOqFwyDB/rr1E9ZsGciGvKPs8R2xYGCacuf3z6K1YKDz182fd+fY3cn3pMqXQ==",

"dev": true,

"dependencies": {

+ "universalify": "^2.0.0"

+ },

+ "optionalDependencies": {

"graceful-fs": "^4.1.6"

}

},

@@ -4665,21 +4696,38 @@

}

},

"node_modules/log4js": {

- "version": "6.3.0",

- "resolved": "https://registry.npmjs.org/log4js/-/log4js-6.3.0.tgz",

- "integrity": "sha512-Mc8jNuSFImQUIateBFwdOQcmC6Q5maU0VVvdC2R6XMb66/VnT+7WS4D/0EeNMZu1YODmJe5NIn2XftCzEocUgw==",

+ "version": "6.4.5",

+ "resolved": "https://registry.npmjs.org/log4js/-/log4js-6.4.5.tgz",

+ "integrity": "sha512-43RJcYZ7nfUxpPO2woTl8CJ0t5+gucLJZ43mtp2PlInT+LygCp/bl6hNJtKulCJ+++fQsjIv4EO3Mp611PfeLQ==",

"dev": true,

"dependencies": {

- "date-format": "^3.0.0",

- "debug": "^4.1.1",

- "flatted": "^2.0.1",

- "rfdc": "^1.1.4",

- "streamroller": "^2.2.4"

+ "date-format": "^4.0.7",

+ "debug": "^4.3.4",

+ "flatted": "^3.2.5",

+ "rfdc": "^1.3.0",

+ "streamroller": "^3.0.7"

},

"engines": {

"node": ">=8.0"

}

},

+ "node_modules/log4js/node_modules/debug": {

+ "version": "4.3.4",

+ "resolved": "https://registry.npmjs.org/debug/-/debug-4.3.4.tgz",

+ "integrity": "sha512-PRWFHuSU3eDtQJPvnNY7Jcket1j0t5OuOsFzPPzsekD52Zl8qUfFIPEiswXqIvHWGVHOgX+7G/vCNNhehwxfkQ==",

+ "dev": true,

+ "dependencies": {

+ "ms": "2.1.2"

+ },

+ "engines": {

+ "node": ">=6.0"

+ },

+ "peerDependenciesMeta": {

+ "supports-color": {

+ "optional": true

+ }

+ }

+ },

"node_modules/lower-case": {

"version": "1.1.4",

"resolved": "https://registry.npmjs.org/lower-case/-/lower-case-1.1.4.tgz",

@@ -4943,9 +4991,9 @@

}

},

"node_modules/minimist": {

- "version": "1.2.5",

- "resolved": "https://registry.npmjs.org/minimist/-/minimist-1.2.5.tgz",

- "integrity": "sha512-FM9nNUYrRBAELZQT3xeZQ7fmMOBg6nWNmJKTcgsJeaLstP/UODVpGsr5OhXhhXg6f+qtJ8uiZ+PUxkDWcgIXLw==",

+ "version": "1.2.6",

+ "resolved": "https://registry.npmjs.org/minimist/-/minimist-1.2.6.tgz",

+ "integrity": "sha512-Jsjnk4bw3YJqYzbdyBiNsPWHPfO++UGG749Cxs6peCu5Xg4nrena6OVxOYxrQTqww0Jmwt+Ref8rggumkTLz9Q==",

"dev": true

},

"node_modules/minimist-options": {

@@ -5282,9 +5330,9 @@

"dev": true

},

"node_modules/nth-check": {

- "version": "2.0.0",

- "resolved": "https://registry.npmjs.org/nth-check/-/nth-check-2.0.0.tgz",

- "integrity": "sha512-i4sc/Kj8htBrAiH1viZ0TgU8Y5XqCaV/FziYK6TBczxmeKm3AEFWqqF3195yKudrarqy7Zu80Ra5dobFjn9X/Q==",

+ "version": "2.0.1",

+ "resolved": "https://registry.npmjs.org/nth-check/-/nth-check-2.0.1.tgz",

+ "integrity": "sha512-it1vE95zF6dTT9lBsYbxvqh0Soy4SPowchj0UBGj/V6cTPnXXtQOPUbhZ6CmGzAD/rW22LQK6E96pcdJXk4A4w==",

"dev": true,

"dependencies": {

"boolbase": "^1.0.0"

@@ -5563,9 +5611,9 @@

}

},

"node_modules/path-parse": {

- "version": "1.0.6",

- "resolved": "https://registry.npmjs.org/path-parse/-/path-parse-1.0.6.tgz",

- "integrity": "sha512-GSmOT2EbHrINBf9SR7CDELwlJ8AENk3Qn7OikK4nFYAu3Ote2+JYNVvkpAEQm3/TLNEJFD/xZJjzyxg3KBWOzw==",

+ "version": "1.0.7",

+ "resolved": "https://registry.npmjs.org/path-parse/-/path-parse-1.0.7.tgz",

+ "integrity": "sha512-LDJzPVEEEPR+y48z93A0Ed0yXb8pAByGWo/k5YYdYgpY2/2EsOsksJrq7lOHxryrVOn1ejG6oAp8ahvOIQD8sw==",

"dev": true

},

"node_modules/path-to-regexp": {

@@ -6093,9 +6141,9 @@

}

},

"node_modules/rfdc": {

- "version": "1.2.0",

- "resolved": "https://registry.npmjs.org/rfdc/-/rfdc-1.2.0.tgz",

- "integrity": "sha512-ijLyszTMmUrXvjSooucVQwimGUk84eRcmCuLV8Xghe3UO85mjUtRAHRyoMM6XtyqbECaXuBWx18La3523sXINA==",

+ "version": "1.3.0",

+ "resolved": "https://registry.npmjs.org/rfdc/-/rfdc-1.3.0.tgz",

+ "integrity": "sha512-V2hovdzFbOi77/WajaSMXk2OLm+xNIeQdMMuB7icj7bk6zi2F8GGAxigcnDFpJHbNyNcgyJDiP+8nOrY5cZGrA==",

"dev": true

},

"node_modules/rimraf": {

@@ -6516,26 +6564,34 @@

}

},

"node_modules/streamroller": {

- "version": "2.2.4",

- "resolved": "https://registry.npmjs.org/streamroller/-/streamroller-2.2.4.tgz",

- "integrity": "sha512-OG79qm3AujAM9ImoqgWEY1xG4HX+Lw+yY6qZj9R1K2mhF5bEmQ849wvrb+4vt4jLMLzwXttJlQbOdPOQVRv7DQ==",

+ "version": "3.0.7",

+ "resolved": "https://registry.npmjs.org/streamroller/-/streamroller-3.0.7.tgz",

+ "integrity": "sha512-kh68kwiDGuIPiPDWwRbEC5us+kfARP1e9AsQiaLaSqGrctOvMn0mtL8iNY3r4/o5nIoYi3gPI1jexguZsXDlxw==",

"dev": true,

"dependencies": {

- "date-format": "^2.1.0",

- "debug": "^4.1.1",

- "fs-extra": "^8.1.0"

+ "date-format": "^4.0.7",

+ "debug": "^4.3.4",

+ "fs-extra": "^10.0.1"

},

"engines": {

"node": ">=8.0"

}

},

- "node_modules/streamroller/node_modules/date-format": {

- "version": "2.1.0",

- "resolved": "https://registry.npmjs.org/date-format/-/date-format-2.1.0.tgz",

- "integrity": "sha512-bYQuGLeFxhkxNOF3rcMtiZxvCBAquGzZm6oWA1oZ0g2THUzivaRhv8uOhdr19LmoobSOLoIAxeUK2RdbM8IFTA==",

+ "node_modules/streamroller/node_modules/debug": {

+ "version": "4.3.4",

+ "resolved": "https://registry.npmjs.org/debug/-/debug-4.3.4.tgz",

+ "integrity": "sha512-PRWFHuSU3eDtQJPvnNY7Jcket1j0t5OuOsFzPPzsekD52Zl8qUfFIPEiswXqIvHWGVHOgX+7G/vCNNhehwxfkQ==",

"dev": true,

+ "dependencies": {

+ "ms": "2.1.2"

+ },

"engines": {

- "node": ">=4.0"

+ "node": ">=6.0"

+ },

+ "peerDependenciesMeta": {

+ "supports-color": {

+ "optional": true

+ }

}

},

"node_modules/string_decoder": {

@@ -7201,12 +7257,12 @@

}

},

"node_modules/universalify": {

- "version": "0.1.2",

- "resolved": "https://registry.npmjs.org/universalify/-/universalify-0.1.2.tgz",

- "integrity": "sha512-rBJeI5CXAlmy1pV+617WB9J63U6XcazHHF2f2dbJix4XzpUF0RS3Zbj0FGIOCAva5P/d/GBOYaACQ1w+0azUkg==",

+ "version": "2.0.0",

+ "resolved": "https://registry.npmjs.org/universalify/-/universalify-2.0.0.tgz",

+ "integrity": "sha512-hAZsKq7Yy11Zu1DE0OzWjw7nnLZmJZYTDZZyEFHZdUhV8FkH5MCfoU1XMaxXovpyW5nq5scPqq0ZDP9Zyl04oQ==",

"dev": true,

"engines": {

- "node": ">= 4.0.0"

+ "node": ">= 10.0.0"

}

},

"node_modules/unpipe": {

diff --git a/node_modules/base64-arraybuffer/LICENSE b/node_modules/@socket.io/base64-arraybuffer/LICENSE

similarity index 100%

rename from node_modules/base64-arraybuffer/LICENSE

rename to node_modules/@socket.io/base64-arraybuffer/LICENSE

diff --git a/node_modules/base64-arraybuffer/README.md b/node_modules/@socket.io/base64-arraybuffer/README.md

similarity index 93%

rename from node_modules/base64-arraybuffer/README.md

rename to node_modules/@socket.io/base64-arraybuffer/README.md

index 4d6ae71..fd718f1 100644

--- a/node_modules/base64-arraybuffer/README.md

+++ b/node_modules/@socket.io/base64-arraybuffer/README.md

@@ -4,6 +4,8 @@

[](https://www.npmjs.org/package/base64-arraybuffer)

[](https://www.npmjs.org/package/base64-arraybuffer)

+Forked from https://github.com/niklasvh/base64-arraybuffer

+

Encode/decode base64 data into ArrayBuffers

### Installing

diff --git a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.es5.js b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.es5.js

similarity index 96%

rename from node_modules/base64-arraybuffer/dist/base64-arraybuffer.es5.js

rename to node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.es5.js

index 285857c..4346610 100644

--- a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.es5.js

+++ b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.es5.js

@@ -1,6 +1,6 @@

/*

* base64-arraybuffer 1.0.1 <https://github.com/niklasvh/base64-arraybuffer>

- * Copyright (c) 2021 Niklas von Hertzen <https://hertzen.com>

+ * Copyright (c) 2022 Niklas von Hertzen <https://hertzen.com>

* Released under MIT License

*/

var chars = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/';

diff --git a/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.es5.js.map b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.es5.js.map

new file mode 100644

index 0000000..b34fd27

--- /dev/null

+++ b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.es5.js.map

@@ -0,0 +1 @@

+{"version":3,"file":"base64-arraybuffer.es5.js","sources":["../src/index.ts"],"sourcesContent":[null],"names":[],"mappings":";;;;;AAAA,IAAM,KAAK,GAAG,kEAAkE,CAAC;AAEjF;AACA,IAAM,MAAM,GAAG,OAAO,UAAU,KAAK,WAAW,GAAG,EAAE,GAAG,IAAI,UAAU,CAAC,GAAG,CAAC,CAAC;AAC5E,KAAK,IAAI,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,KAAK,CAAC,MAAM,EAAE,CAAC,EAAE,EAAE;IACnC,MAAM,CAAC,KAAK,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,GAAG,CAAC,CAAC;CACnC;IAEY,MAAM,GAAG,UAAC,WAAwB;IAC3C,IAAI,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,EACnC,CAAC,EACD,GAAG,GAAG,KAAK,CAAC,MAAM,EAClB,MAAM,GAAG,EAAE,CAAC;IAEhB,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;QACzB,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC;QAC/B,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,CAAC,GAAG,CAAC,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;QAC7D,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;QAClE,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,CAAC,CAAC;KACtC;IAED,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;QACf,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,GAAG,CAAC;KACzD;SAAM,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;QACtB,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,IAAI,CAAC;KAC1D;IAED,OAAO,MAAM,CAAC;AAClB,EAAE;IAEW,MAAM,GAAG,UAAC,MAAc;IACjC,IAAI,YAAY,GAAG,MAAM,CAAC,MAAM,GAAG,IAAI,EACnC,GAAG,GAAG,MAAM,CAAC,MAAM,EACnB,CAAC,EACD,CAAC,GAAG,CAAC,EACL,QAAQ,EACR,QAAQ,EACR,QAAQ,EACR,QAAQ,CAAC;IAEb,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;QACnC,YAAY,EAAE,CAAC;QACf,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;YACnC,YAAY,EAAE,CAAC;SAClB;KACJ;IAED,IAAM,WAAW,GAAG,IAAI,WAAW,CAAC,YAAY,CAAC,EAC7C,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,CAAC;IAExC,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;QACzB,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,CAAC;QACxC,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC
,CAAC,CAAC,CAAC;QAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;QAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;QAE5C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,QAAQ,IAAI,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;QAC/C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,EAAE,KAAK,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;QACtD,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,CAAC,KAAK,CAAC,KAAK,QAAQ,GAAG,EAAE,CAAC,CAAC;KACxD;IAED,OAAO,WAAW,CAAC;AACvB;;;;"}

\ No newline at end of file

diff --git a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.umd.js b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.umd.js

similarity index 97%

rename from node_modules/base64-arraybuffer/dist/base64-arraybuffer.umd.js

rename to node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.umd.js

index 7fa6e0b..fd873c2 100644

--- a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.umd.js

+++ b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.umd.js

@@ -1,6 +1,6 @@

/*

* base64-arraybuffer 1.0.1 <https://github.com/niklasvh/base64-arraybuffer>

- * Copyright (c) 2021 Niklas von Hertzen <https://hertzen.com>

+ * Copyright (c) 2022 Niklas von Hertzen <https://hertzen.com>

* Released under MIT License

*/

(function (global, factory) {

diff --git a/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.umd.js.map b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.umd.js.map

new file mode 100644

index 0000000..05a3cc0

--- /dev/null

+++ b/node_modules/@socket.io/base64-arraybuffer/dist/base64-arraybuffer.umd.js.map

@@ -0,0 +1 @@

+{"version":3,"file":"base64-arraybuffer.umd.js","sources":["../src/index.ts"],"sourcesContent":[null],"names":[],"mappings":";;;;;;;;;;;IAAA,IAAM,KAAK,GAAG,kEAAkE,CAAC;IAEjF;IACA,IAAM,MAAM,GAAG,OAAO,UAAU,KAAK,WAAW,GAAG,EAAE,GAAG,IAAI,UAAU,CAAC,GAAG,CAAC,CAAC;IAC5E,KAAK,IAAI,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,KAAK,CAAC,MAAM,EAAE,CAAC,EAAE,EAAE;QACnC,MAAM,CAAC,KAAK,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,GAAG,CAAC,CAAC;KACnC;QAEY,MAAM,GAAG,UAAC,WAAwB;QAC3C,IAAI,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,EACnC,CAAC,EACD,GAAG,GAAG,KAAK,CAAC,MAAM,EAClB,MAAM,GAAG,EAAE,CAAC;QAEhB,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;YACzB,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC;YAC/B,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,CAAC,GAAG,CAAC,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;YAC7D,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;YAClE,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,CAAC,CAAC;SACtC;QAED,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;YACf,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,GAAG,CAAC;SACzD;aAAM,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;YACtB,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,IAAI,CAAC;SAC1D;QAED,OAAO,MAAM,CAAC;IAClB,EAAE;QAEW,MAAM,GAAG,UAAC,MAAc;QACjC,IAAI,YAAY,GAAG,MAAM,CAAC,MAAM,GAAG,IAAI,EACnC,GAAG,GAAG,MAAM,CAAC,MAAM,EACnB,CAAC,EACD,CAAC,GAAG,CAAC,EACL,QAAQ,EACR,QAAQ,EACR,QAAQ,EACR,QAAQ,CAAC;QAEb,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;YACnC,YAAY,EAAE,CAAC;YACf,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;gBACnC,YAAY,EAAE,CAAC;aAClB;SACJ;QAED,IAAM,WAAW,GAAG,IAAI,WAAW,CAAC,YAAY,CAAC,EAC7C,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,CAAC;QAExC,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;YACzB,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,CAAC;YACxC,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GA
AG,CAAC,CAAC,CAAC,CAAC;YAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;YAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;YAE5C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,QAAQ,IAAI,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;YAC/C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,EAAE,KAAK,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;YACtD,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,CAAC,KAAK,CAAC,KAAK,QAAQ,GAAG,EAAE,CAAC,CAAC;SACxD;QAED,OAAO,WAAW,CAAC;IACvB;;;;;;;;;;;"}

\ No newline at end of file

diff --git a/node_modules/base64-arraybuffer/dist/lib/index.js b/node_modules/@socket.io/base64-arraybuffer/dist/lib/index.js

similarity index 99%

rename from node_modules/base64-arraybuffer/dist/lib/index.js

rename to node_modules/@socket.io/base64-arraybuffer/dist/lib/index.js

index 79fa4b7..11c7d94 100644

--- a/node_modules/base64-arraybuffer/dist/lib/index.js

+++ b/node_modules/@socket.io/base64-arraybuffer/dist/lib/index.js

@@ -45,4 +45,4 @@

return arraybuffer;

};

exports.decode = decode;

-//# sourceMappingURL=index.js.map

\ No newline at end of file

+//# sourceMappingURL=index.js.map

diff --git a/node_modules/base64-arraybuffer/dist/lib/index.js.map b/node_modules/@socket.io/base64-arraybuffer/dist/lib/index.js.map

similarity index 100%

rename from node_modules/base64-arraybuffer/dist/lib/index.js.map

rename to node_modules/@socket.io/base64-arraybuffer/dist/lib/index.js.map

diff --git a/node_modules/base64-arraybuffer/dist/types/index.d.ts b/node_modules/@socket.io/base64-arraybuffer/dist/types/index.d.ts

similarity index 100%

rename from node_modules/base64-arraybuffer/dist/types/index.d.ts

rename to node_modules/@socket.io/base64-arraybuffer/dist/types/index.d.ts

diff --git a/node_modules/base64-arraybuffer/package.json b/node_modules/@socket.io/base64-arraybuffer/package.json

similarity index 81%

rename from node_modules/base64-arraybuffer/package.json

rename to node_modules/@socket.io/base64-arraybuffer/package.json

index 5671ca8..9de33b5 100644

--- a/node_modules/base64-arraybuffer/package.json

+++ b/node_modules/@socket.io/base64-arraybuffer/package.json

@@ -1,11 +1,11 @@

{

- "name": "base64-arraybuffer",

+ "name": "@socket.io/base64-arraybuffer",

"description": "Encode/decode base64 data into ArrayBuffers",

"main": "dist/base64-arraybuffer.umd.js",

"module": "dist/base64-arraybuffer.es5.js",

"typings": "dist/types/index.d.ts",

- "version": "1.0.1",

- "homepage": "https://github.com/niklasvh/base64-arraybuffer",

+ "version": "1.0.2",

+ "homepage": "https://github.com/socketio/base64-arraybuffer",

"author": {

"name": "Niklas von Hertzen",

"email": "niklasvh@gmail.com",

@@ -13,10 +13,10 @@

},

"repository": {

"type": "git",

- "url": "https://github.com/niklasvh/base64-arraybuffer"

+ "url": "https://github.com/socketio/base64-arraybuffer"

},

"bugs": {

- "url": "https://github.com/niklasvh/base64-arraybuffer/issues"

+ "url": "https://github.com/socketio/base64-arraybuffer/issues"

},

"license": "MIT",

"engines": {

@@ -50,5 +50,9 @@

"tslint-config-prettier": "^1.18.0",

"typescript": "^4.3.5"

},

- "keywords": []

+ "keywords": [],

+ "files": [

+ "src/",

+ "dist/"

+ ]

}

diff --git a/node_modules/@socket.io/base64-arraybuffer/src/index.ts b/node_modules/@socket.io/base64-arraybuffer/src/index.ts

new file mode 100644

index 0000000..2de9733

--- /dev/null

+++ b/node_modules/@socket.io/base64-arraybuffer/src/index.ts

@@ -0,0 +1,63 @@

+const chars = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/';

+

+// Use a lookup table to find the index.

+const lookup = typeof Uint8Array === 'undefined' ? [] : new Uint8Array(256);

+for (let i = 0; i < chars.length; i++) {

+ lookup[chars.charCodeAt(i)] = i;

+}

+

+export const encode = (arraybuffer: ArrayBuffer): string => {

+ let bytes = new Uint8Array(arraybuffer),

+ i,

+ len = bytes.length,

+ base64 = '';

+

+ for (i = 0; i < len; i += 3) {

+ base64 += chars[bytes[i] >> 2];

+ base64 += chars[((bytes[i] & 3) << 4) | (bytes[i + 1] >> 4)];

+ base64 += chars[((bytes[i + 1] & 15) << 2) | (bytes[i + 2] >> 6)];

+ base64 += chars[bytes[i + 2] & 63];

+ }

+

+ if (len % 3 === 2) {

+ base64 = base64.substring(0, base64.length - 1) + '=';

+ } else if (len % 3 === 1) {

+ base64 = base64.substring(0, base64.length - 2) + '==';

+ }

+

+ return base64;

+};

+

+export const decode = (base64: string): ArrayBuffer => {

+ let bufferLength = base64.length * 0.75,

+ len = base64.length,

+ i,

+ p = 0,

+ encoded1,

+ encoded2,

+ encoded3,

+ encoded4;

+

+ if (base64[base64.length - 1] === '=') {

+ bufferLength--;

+ if (base64[base64.length - 2] === '=') {

+ bufferLength--;

+ }

+ }

+

+ const arraybuffer = new ArrayBuffer(bufferLength),

+ bytes = new Uint8Array(arraybuffer);

+

+ for (i = 0; i < len; i += 4) {

+ encoded1 = lookup[base64.charCodeAt(i)];

+ encoded2 = lookup[base64.charCodeAt(i + 1)];

+ encoded3 = lookup[base64.charCodeAt(i + 2)];

+ encoded4 = lookup[base64.charCodeAt(i + 3)];

+

+ bytes[p++] = (encoded1 << 2) | (encoded2 >> 4);

+ bytes[p++] = ((encoded2 & 15) << 4) | (encoded3 >> 2);

+ bytes[p++] = ((encoded3 & 3) << 6) | (encoded4 & 63);

+ }

+

+ return arraybuffer;

+};

diff --git a/node_modules/@types/yauzl/LICENSE b/node_modules/@types/yauzl/LICENSE

new file mode 100644

index 0000000..2107107

--- /dev/null

+++ b/node_modules/@types/yauzl/LICENSE

@@ -0,0 +1,21 @@

+ MIT License

+

+ Copyright (c) Microsoft Corporation. All rights reserved.

+

+ Permission is hereby granted, free of charge, to any person obtaining a copy

+ of this software and associated documentation files (the "Software"), to deal

+ in the Software without restriction, including without limitation the rights

+ to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+ copies of the Software, and to permit persons to whom the Software is

+ furnished to do so, subject to the following conditions:

+

+ The above copyright notice and this permission notice shall be included in all

+ copies or substantial portions of the Software.

+

+ THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+ IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+ FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+ AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+ LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+ OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+ SOFTWARE

diff --git a/node_modules/@types/yauzl/README.md b/node_modules/@types/yauzl/README.md

new file mode 100644

index 0000000..f38f9c0

--- /dev/null

+++ b/node_modules/@types/yauzl/README.md

@@ -0,0 +1,16 @@

+# Installation

+> `npm install --save @types/yauzl`

+

+# Summary

+This package contains type definitions for yauzl (https://github.com/thejoshwolfe/yauzl).

+

+# Details

+Files were exported from https://github.com/DefinitelyTyped/DefinitelyTyped/tree/master/types/yauzl

+

+Additional Details

+ * Last updated: Wed, 02 Jan 2019 20:27:45 GMT

+ * Dependencies: @types/node

+ * Global values: none

+

+# Credits

+These definitions were written by Florian Keller <https://github.com/ffflorian>.

diff --git a/node_modules/@types/yauzl/index.d.ts b/node_modules/@types/yauzl/index.d.ts

new file mode 100644

index 0000000..11c1849

--- /dev/null

+++ b/node_modules/@types/yauzl/index.d.ts

@@ -0,0 +1,98 @@

+// Type definitions for yauzl 2.9

+// Project: https://github.com/thejoshwolfe/yauzl

+// Definitions by: Florian Keller <https://github.com/ffflorian>

+// Definitions: https://github.com/DefinitelyTyped/DefinitelyTyped

+

+/// <reference types="node" />

+

+import { EventEmitter } from 'events';

+import { Readable } from 'stream';

+

+export abstract class RandomAccessReader extends EventEmitter {

+ _readStreamForRange(start: number, end: number): void;

+ createReadStream(options: { start: number; end: number }): void;

+ read(buffer: Buffer, offset: number, length: number, position: number, callback: (err?: Error) => void): void;

+ close(callback: (err?: Error) => void): void;

+}

+

+export class Entry {

+ comment: string;

+ compressedSize: number;

+ compressionMethod: number;

+ crc32: number;

+ externalFileAttributes: number;

+ extraFieldLength: number;

+ extraFields: Array<{ id: number; data: Buffer }>;

+ fileCommentLength: number;

+ fileName: string;

+ fileNameLength: number;

+ generalPurposeBitFlag: number;

+ internalFileAttributes: number;

+ lastModFileDate: number;

+ lastModFileTime: number;

+ relativeOffsetOfLocalHeader: number;

+ uncompressedSize: number;

+ versionMadeBy: number;

+ versionNeededToExtract: number;

+

+ getLastModDate(): Date;

+ isEncrypted(): boolean;

+ isCompressed(): boolean;

+}

+

+export interface ZipFileOptions {

+ decompress: boolean | null;

+ decrypt: boolean | null;

+ start: number | null;

+ end: number | null;

+}

+

+export class ZipFile extends EventEmitter {

+ autoClose: boolean;

+ comment: string;

+ decodeStrings: boolean;

+ emittedError: boolean;

+ entriesRead: number;

+ entryCount: number;

+ fileSize: number;

+ isOpen: boolean;

+ lazyEntries: boolean;

+ readEntryCursor: boolean;

+ validateEntrySizes: boolean;

+

+ constructor(

+ reader: RandomAccessReader,

+ centralDirectoryOffset: number,

+ fileSize: number,

+ entryCount: number,

+ comment: string,

+ autoClose: boolean,

+ lazyEntries: boolean,

+ decodeStrings: boolean,

+ validateEntrySizes: boolean,

+ );

+

+ openReadStream(entry: Entry, options: ZipFileOptions, callback: (err?: Error, stream?: Readable) => void): void;

+ openReadStream(entry: Entry, callback: (err?: Error, stream?: Readable) => void): void;

+ close(): void;

+ readEntry(): void;

+}

+

+export interface Options {

+ autoClose?: boolean;

+ lazyEntries?: boolean;

+ decodeStrings?: boolean;

+ validateEntrySizes?: boolean;

+ strictFileNames?: boolean;

+}

+

+export function open(path: string, options: Options, callback?: (err?: Error, zipfile?: ZipFile) => void): void;

+export function open(path: string, callback?: (err?: Error, zipfile?: ZipFile) => void): void;

+export function fromFd(fd: number, options: Options, callback?: (err?: Error, zipfile?: ZipFile) => void): void;

+export function fromFd(fd: number, callback?: (err?: Error, zipfile?: ZipFile) => void): void;

+export function fromBuffer(buffer: Buffer, options: Options, callback?: (err?: Error, zipfile?: ZipFile) => void): void;

+export function fromBuffer(buffer: Buffer, callback?: (err?: Error, zipfile?: ZipFile) => void): void;

+export function fromRandomAccessReader(reader: RandomAccessReader, totalSize: number, options: Options, callback: (err?: Error, zipfile?: ZipFile) => void): void;

+export function fromRandomAccessReader(reader: RandomAccessReader, totalSize: number, callback: (err?: Error, zipfile?: ZipFile) => void): void;

+export function dosDateTimeToDate(date: number, time: number): Date;

+export function validateFileName(fileName: string): string | null;
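The new `@types/yauzl` declarations end with two small helpers: `dosDateTimeToDate(date, time)` and `validateFileName(fileName)`. The sketch below shows what those helpers compute. It is not yauzl's source; it is a standalone illustration written under two assumptions.

- **Date/time decoding:** it uses the standard MS-DOS packed date/time layout found in ZIP entry headers.
- **File-name checks:** it rejects backslashes, absolute paths and `..` segments. The wording of the returned error messages is illustrative only.

```javascript
// Illustrative sketch, not yauzl's implementation.
// `date` and `time` are the raw 16-bit MS-DOS fields from a ZIP entry header.
function dosDateTimeToDate(date, time) {
  const day = date & 0x1f;                  // bits 0-4: day of month (1-31)
  const month = ((date >> 5) & 0x0f) - 1;   // bits 5-8: month 1-12 -> JS 0-11
  const year = ((date >> 9) & 0x7f) + 1980; // bits 9-15: years since 1980
  const second = (time & 0x1f) * 2;         // bits 0-4: seconds / 2
  const minute = (time >> 5) & 0x3f;        // bits 5-10: minutes
  const hour = (time >> 11) & 0x1f;         // bits 11-15: hours
  // DOS timestamps carry no timezone, so interpret them as local time.
  return new Date(year, month, day, hour, minute, second, 0);
}

// Returns null for a safe relative path, otherwise an (illustrative) error string.
function validateFileName(fileName) {
  if (fileName.includes("\\")) {
    return "invalid characters in fileName: " + fileName;
  }
  if (/^[a-zA-Z]:/.test(fileName) || fileName.startsWith("/")) {
    return "absolute path: " + fileName;
  }
  if (fileName.split("/").includes("..")) {
    return "invalid relative path: " + fileName;
  }
  return null;
}
```

In real code you would call the library's own `yauzl.dosDateTimeToDate` and `yauzl.validateFileName` as declared above. The sketch only makes the bit layout and the path checks concrete.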

diff --git a/node_modules/@types/yauzl/package.json b/node_modules/@types/yauzl/package.json

new file mode 100644

index 0000000..736b4c6

--- /dev/null

+++ b/node_modules/@types/yauzl/package.json

@@ -0,0 +1,25 @@

+{

+ "name": "@types/yauzl",

+ "version": "2.9.1",

+ "description": "TypeScript definitions for yauzl",

+ "license": "MIT",

+ "contributors": [

+ {

+ "name": "Florian Keller",

+ "url": "https://github.com/ffflorian",

+ "githubUsername": "ffflorian"

+ }

+ ],

+ "main": "",

+ "types": "index",

+ "repository": {

+ "type": "git",

+ "url": "https://github.com/DefinitelyTyped/DefinitelyTyped.git"

+ },

+ "scripts": {},

+ "dependencies": {

+ "@types/node": "*"

+ },

+ "typesPublisherContentHash": "78f765e4caa71766b61010d584b87ea4cf34e0bac10cac6b16d722d8a8456073",

+ "typeScriptVersion": "2.0"

+}

diff --git a/node_modules/base64-arraybuffer/CHANGELOG.md b/node_modules/base64-arraybuffer/CHANGELOG.md

deleted file mode 100644

index 4a304a0..0000000

--- a/node_modules/base64-arraybuffer/CHANGELOG.md

+++ /dev/null

@@ -1,19 +0,0 @@

-# Changelog

-

-All notable changes to this project will be documented in this file. See [standard-version](https://github.com/conventional-changelog/standard-version) for commit guidelines.

-

-## [1.0.1](https://github.com/niklasvh/base64-arraybuffer/compare/v1.0.0...v1.0.1) (2021-08-10)

-

-

-### fix

-

-* make lib loadable on ie9 (#30) ([a618d14](https://github.com/niklasvh/base64-arraybuffer/commit/a618d14d323f4eb230321a3609bfbc9f23f430c0)), closes [#30](https://github.com/niklasvh/base64-arraybuffer/issues/30)

-

-

-

-# [1.0.0](https://github.com/niklasvh/base64-arraybuffer/compare/v0.2.0...v1.0.0) (2021-08-10)

-

-

-### docs

-

-* update readme (#29) ([0a0253d](https://github.com/niklasvh/base64-arraybuffer/commit/0a0253dcc2e3f01a1f6d04fa81d578f714fce27f)), closes [#29](https://github.com/niklasvh/base64-arraybuffer/issues/29)

diff --git a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.es5.js.map b/node_modules/base64-arraybuffer/dist/base64-arraybuffer.es5.js.map

deleted file mode 100644

index 142c152..0000000

--- a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.es5.js.map

+++ /dev/null

@@ -1 +0,0 @@

-{"version":3,"file":"base64-arraybuffer.es5.js","sources":["../../src/index.ts"],"sourcesContent":[null],"names":[],"mappings":";;;;;AAAA,IAAM,KAAK,GAAG,kEAAkE,CAAC;AAEjF;AACA,IAAM,MAAM,GAAG,OAAO,UAAU,KAAK,WAAW,GAAG,EAAE,GAAG,IAAI,UAAU,CAAC,GAAG,CAAC,CAAC;AAC5E,KAAK,IAAI,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,KAAK,CAAC,MAAM,EAAE,CAAC,EAAE,EAAE;IACnC,MAAM,CAAC,KAAK,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,GAAG,CAAC,CAAC;CACnC;IAEY,MAAM,GAAG,UAAC,WAAwB;IAC3C,IAAI,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,EACnC,CAAC,EACD,GAAG,GAAG,KAAK,CAAC,MAAM,EAClB,MAAM,GAAG,EAAE,CAAC;IAEhB,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;QACzB,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC;QAC/B,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,CAAC,GAAG,CAAC,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;QAC7D,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;QAClE,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,CAAC,CAAC;KACtC;IAED,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;QACf,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,GAAG,CAAC;KACzD;SAAM,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;QACtB,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,IAAI,CAAC;KAC1D;IAED,OAAO,MAAM,CAAC;AAClB,EAAE;IAEW,MAAM,GAAG,UAAC,MAAc;IACjC,IAAI,YAAY,GAAG,MAAM,CAAC,MAAM,GAAG,IAAI,EACnC,GAAG,GAAG,MAAM,CAAC,MAAM,EACnB,CAAC,EACD,CAAC,GAAG,CAAC,EACL,QAAQ,EACR,QAAQ,EACR,QAAQ,EACR,QAAQ,CAAC;IAEb,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;QACnC,YAAY,EAAE,CAAC;QACf,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;YACnC,YAAY,EAAE,CAAC;SAClB;KACJ;IAED,IAAM,WAAW,GAAG,IAAI,WAAW,CAAC,YAAY,CAAC,EAC7C,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,CAAC;IAExC,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;QACzB,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,CAAC;QACxC,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,C
AAC,CAAC,CAAC,CAAC;QAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;QAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;QAE5C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,QAAQ,IAAI,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;QAC/C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,EAAE,KAAK,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;QACtD,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,CAAC,KAAK,CAAC,KAAK,QAAQ,GAAG,EAAE,CAAC,CAAC;KACxD;IAED,OAAO,WAAW,CAAC;AACvB;;;;"}

\ No newline at end of file

diff --git a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.umd.js.map b/node_modules/base64-arraybuffer/dist/base64-arraybuffer.umd.js.map

deleted file mode 100644

index 1fafdef..0000000

--- a/node_modules/base64-arraybuffer/dist/base64-arraybuffer.umd.js.map

+++ /dev/null

@@ -1 +0,0 @@

-{"version":3,"file":"base64-arraybuffer.umd.js","sources":["../../src/index.ts"],"sourcesContent":[null],"names":[],"mappings":";;;;;;;;;;;IAAA,IAAM,KAAK,GAAG,kEAAkE,CAAC;IAEjF;IACA,IAAM,MAAM,GAAG,OAAO,UAAU,KAAK,WAAW,GAAG,EAAE,GAAG,IAAI,UAAU,CAAC,GAAG,CAAC,CAAC;IAC5E,KAAK,IAAI,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,KAAK,CAAC,MAAM,EAAE,CAAC,EAAE,EAAE;QACnC,MAAM,CAAC,KAAK,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,GAAG,CAAC,CAAC;KACnC;QAEY,MAAM,GAAG,UAAC,WAAwB;QAC3C,IAAI,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,EACnC,CAAC,EACD,GAAG,GAAG,KAAK,CAAC,MAAM,EAClB,MAAM,GAAG,EAAE,CAAC;QAEhB,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;YACzB,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC;YAC/B,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,CAAC,GAAG,CAAC,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;YAC7D,MAAM,IAAI,KAAK,CAAC,CAAC,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,KAAK,CAAC,KAAK,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,CAAC;YAClE,MAAM,IAAI,KAAK,CAAC,KAAK,CAAC,CAAC,GAAG,CAAC,CAAC,GAAG,EAAE,CAAC,CAAC;SACtC;QAED,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;YACf,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,GAAG,CAAC;SACzD;aAAM,IAAI,GAAG,GAAG,CAAC,KAAK,CAAC,EAAE;YACtB,MAAM,GAAG,MAAM,CAAC,SAAS,CAAC,CAAC,EAAE,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,GAAG,IAAI,CAAC;SAC1D;QAED,OAAO,MAAM,CAAC;IAClB,EAAE;QAEW,MAAM,GAAG,UAAC,MAAc;QACjC,IAAI,YAAY,GAAG,MAAM,CAAC,MAAM,GAAG,IAAI,EACnC,GAAG,GAAG,MAAM,CAAC,MAAM,EACnB,CAAC,EACD,CAAC,GAAG,CAAC,EACL,QAAQ,EACR,QAAQ,EACR,QAAQ,EACR,QAAQ,CAAC;QAEb,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;YACnC,YAAY,EAAE,CAAC;YACf,IAAI,MAAM,CAAC,MAAM,CAAC,MAAM,GAAG,CAAC,CAAC,KAAK,GAAG,EAAE;gBACnC,YAAY,EAAE,CAAC;aAClB;SACJ;QAED,IAAM,WAAW,GAAG,IAAI,WAAW,CAAC,YAAY,CAAC,EAC7C,KAAK,GAAG,IAAI,UAAU,CAAC,WAAW,CAAC,CAAC;QAExC,KAAK,CAAC,GAAG,CAAC,EAAE,CAAC,GAAG,GAAG,EAAE,CAAC,IAAI,CAAC,EAAE;YACzB,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,CAAC,CAAC,CAAC;YACxC,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC
,GAAG,CAAC,CAAC,CAAC,CAAC;YAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;YAC5C,QAAQ,GAAG,MAAM,CAAC,MAAM,CAAC,UAAU,CAAC,CAAC,GAAG,CAAC,CAAC,CAAC,CAAC;YAE5C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,QAAQ,IAAI,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;YAC/C,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,EAAE,KAAK,CAAC,KAAK,QAAQ,IAAI,CAAC,CAAC,CAAC;YACtD,KAAK,CAAC,CAAC,EAAE,CAAC,GAAG,CAAC,CAAC,QAAQ,GAAG,CAAC,KAAK,CAAC,KAAK,QAAQ,GAAG,EAAE,CAAC,CAAC;SACxD;QAED,OAAO,WAAW,CAAC;IACvB;;;;;;;;;;;"}

\ No newline at end of file
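This patch deletes the vendored `base64-arraybuffer` build artifacts and adds `@socket.io/base64-arraybuffer` to the lockfile. The deleted source maps describe an encode/decode pair built on a 64-character lookup table. Below is a minimal sketch of standard (RFC 4648) base64 over an `ArrayBuffer`, using that technique. It is not the package's source, and the names `encodeBase64` and `decodeBase64` are hypothetical.

```javascript
// Illustrative sketch of base64 <-> ArrayBuffer conversion (not the package's code).
const CHARS = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Reverse lookup: character code -> 6-bit value ('=' maps to 0).
const LOOKUP = new Uint8Array(256);
for (let i = 0; i < CHARS.length; i++) {
  LOOKUP[CHARS.charCodeAt(i)] = i;
}

function encodeBase64(arraybuffer) {
  const bytes = new Uint8Array(arraybuffer);
  const len = bytes.length;
  let out = "";
  // Every 3 input bytes (24 bits) become 4 output characters (6 bits each).
  for (let i = 0; i < len; i += 3) {
    const b0 = bytes[i];
    const b1 = i + 1 < len ? bytes[i + 1] : 0;
    const b2 = i + 2 < len ? bytes[i + 2] : 0;
    out += CHARS[b0 >> 2];
    out += CHARS[((b0 & 3) << 4) | (b1 >> 4)];
    out += CHARS[((b1 & 15) << 2) | (b2 >> 6)];
    out += CHARS[b2 & 63];
  }
  // Replace characters that only encode zero padding with '='.
  if (len % 3 === 2) {
    out = out.slice(0, -1) + "=";
  } else if (len % 3 === 1) {
    out = out.slice(0, -2) + "==";
  }
  return out;
}

// Assumes well-formed, padded input (length a multiple of 4).
function decodeBase64(base64) {
  let byteLength = (base64.length / 4) * 3;
  if (base64.endsWith("==")) byteLength -= 2;
  else if (base64.endsWith("=")) byteLength -= 1;
  const bytes = new Uint8Array(byteLength);
  let p = 0;
  for (let i = 0; i < base64.length; i += 4) {
    const e1 = LOOKUP[base64.charCodeAt(i)];
    const e2 = LOOKUP[base64.charCodeAt(i + 1)];
    const e3 = LOOKUP[base64.charCodeAt(i + 2)];
    const e4 = LOOKUP[base64.charCodeAt(i + 3)];
    // Writes past the end of a typed array are ignored, which drops padding bytes.
    bytes[p++] = (e1 << 2) | (e2 >> 4);
    bytes[p++] = ((e2 & 15) << 4) | (e3 >> 2);
    bytes[p++] = ((e3 & 3) << 6) | e4;
  }
  return bytes.buffer;
}
```

For example, `encodeBase64(new Uint8Array([1, 2, 3]).buffer)` yields `"AQID"`. That matches the binary payload shown in the engine.io-parser changelog later in this diff (`bAQID`, where `b` marks base64-encoded binary).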

diff --git a/node_modules/base64-arraybuffer/rollup.config.ts b/node_modules/base64-arraybuffer/rollup.config.ts

deleted file mode 100644

index 4d44ff7..0000000

--- a/node_modules/base64-arraybuffer/rollup.config.ts

+++ /dev/null

@@ -1,40 +0,0 @@

-import resolve from '@rollup/plugin-node-resolve';

-import commonjs from '@rollup/plugin-commonjs';

-import sourceMaps from 'rollup-plugin-sourcemaps';

-import typescript from '@rollup/plugin-typescript';

-import json from 'rollup-plugin-json';

-

-const pkg = require('./package.json');

-

-const banner = `/*

- * ${pkg.name} ${pkg.version} <${pkg.homepage}>

- * Copyright (c) ${(new Date()).getFullYear()} ${pkg.author.name} <${pkg.author.url}>

- * Released under ${pkg.license} License

- */`;

-

-export default {

- input: `src/index.ts`,

- output: [

- { file: pkg.main, name: pkg.name, format: 'umd', banner, sourcemap: true },

- { file: pkg.module, format: 'esm', banner, sourcemap: true },

- ],

- external: [],

- watch: {

- include: 'src/**',

- },

- plugins: [

- // Allow node_modules resolution, so you can use 'external' to control

- // which external modules to include in the bundle

- // https://github.com/rollup/rollup-plugin-node-resolve#usage

- resolve(),

- // Allow json resolution

- json(),

- // Compile TypeScript files

- typescript(),

- // Allow bundling cjs modules (unlike webpack, rollup doesn't understand cjs)

- commonjs(),

-

- // Resolve source maps to the original source

- sourceMaps(),

- ],

-}

diff --git a/node_modules/date-format/.eslintrc b/node_modules/date-format/.eslintrc

deleted file mode 100644

index 54dbae8..0000000

--- a/node_modules/date-format/.eslintrc

+++ /dev/null

@@ -1,12 +0,0 @@

-{

- "extends": [

- "eslint:recommended"

- ],

- "env": {

- "node": true,

- "mocha": true

- },

- "plugins": [

- "mocha"

- ]

-}

diff --git a/node_modules/date-format/.travis.yml b/node_modules/date-format/.travis.yml

deleted file mode 100644

index 19b7cc8..0000000

--- a/node_modules/date-format/.travis.yml

+++ /dev/null

@@ -1,6 +0,0 @@

-language: node_js

-sudo: false

-node_js:

- - "10"

- - "8"

- - "6"

diff --git a/node_modules/date-format/CHANGELOG.md b/node_modules/date-format/CHANGELOG.md

new file mode 100644

index 0000000..8793a90

--- /dev/null

+++ b/node_modules/date-format/CHANGELOG.md

@@ -0,0 +1,65 @@

+# date-format Changelog

+

+## 4.0.7

+

+- [chore(dep): updated dependencies](https://github.com/nomiddlename/date-format/pull/57) - thanks [@peteriman](https://github.com/peteriman)

+ - chore(dev): bump eslint-plugin-mocha from 10.0.3 to 10.0.4

+ - updated package-lock.json

+- [chore(dep): updated dependencies](https://github.com/nomiddlename/date-format/pull/54) - thanks [@peteriman](https://github.com/peteriman)

+ - chore(dev): bump eslint from 8.11.0 to 8.13.0

+ - package-lock.json

+

+## 4.0.6

+

+- [chore(dep): updated dependencies](https://github.com/nomiddlename/date-format/pull/52) - thanks [@peteriman](https://github.com/peteriman)

+ - package-lock.json

+

+## 4.0.5

+

+- [chore(test): better test coverage instead of ignoring](https://github.com/nomiddlename/date-format/pull/48) - thanks [@peteriman](https://github.com/peteriman)

+- [chore(dep): updated dependencies](https://github.com/nomiddlename/date-format/pull/49) - thanks [@peteriman](https://github.com/peteriman)

+ - chore(dev): eslint from 8.10.0 to 8.11.0

+ - chore(dev): mocha from 9.2.1 to 9.2.2

+ - package-lock.json

+- [chore(docs): updated README.md with badges](https://github.com/nomiddlename/date-format/pull/50) thanks [@peteriman](https://github.com/peteriman)

+

+## 4.0.4

+

+- Updated dependencies - thanks [@peteriman](https://github.com/peteriman)

+ - [chore(dev): eslint from 8.8.0 to 8.10.0 and mocha from 9.2.0 to 9.2.1](https://github.com/nomiddlename/date-format/pull/46)

+ - [chore(dev): eslint from 8.7.0 to 8.8.0 and mocha from 9.1.4 to 9.2.0](https://github.com/nomiddlename/date-format/pull/45)

+ - [package-lock.json](https://github.com/nomiddlename/date-format/pull/44)

+

+## 4.0.3

+

+- [100% test coverage](https://github.com/nomiddlename/date-format/pull/42) - thanks [@peteriman](https://github.com/peteriman)

+- Updated dependencies - thanks [@peteriman](https://github.com/peteriman)

+ - [chore(dev): eslint from 8.6.0 to 8.7.0 and mocha from 9.1.3 to 9.1.4](https://github.com/nomiddlename/date-format/pull/41)

+

+## 4.0.2

+

+- [Not to publish misc files to NPM](https://github.com/nomiddlename/date-format/pull/39) - thanks [@peteriman](https://github.com/peteriman)

+- CHANGELOG.md

+ - [Removed "log4js" from title of CHANGELOG.md](https://github.com/nomiddlename/date-format/pull/37) - thanks [@joshuabremerdexcom](https://github.com/joshuabremerdexcom)

+ - [Added "date-format" to title of CHANGELOG.md](https://github.com/nomiddlename/date-format/commit/64a95d0386853692d7d65174f94a0751e775f7ce#diff-06572a96a58dc510037d5efa622f9bec8519bc1beab13c9f251e97e657a9d4ed) - thanks [@peteriman](https://github.com/peteriman)

+- Updated dependencies - thanks [@peteriman](https://github.com/peteriman)

+ - [chore(dev): eslint-plugin-mocha from 5.3.0 to 10.0.3](https://github.com/nomiddlename/date-format/pull/38)

+

+## 4.0.1

+

+- is exactly the same as 4.0.0 and is a re-published 4.0.0 to npm

+

+## 4.0.0

+

+- [Fix timezone format to include colon separator](https://github.com/nomiddlename/date-format/pull/27) - thanks [@peteriman](https://github.com/peteriman)

+ - [test: have a test case for timezone with colon](https://github.com/nomiddlename/date-format/pull/32) - thanks [@peteriman](https://github.com/peteriman)

+- [Docs: Updated README.md with more examples and expected output](https://github.com/nomiddlename/date-format/pull/33) - thanks [@peteriman](https://github.com/peteriman)

+- Updated dependencies

+ - [should-util from 1.0.0 to 1.0.1](https://github.com/nomiddlename/date-format/pull/31) - thanks [@peteriman](https://github.com/peteriman)

+ - [chore(dev): eslint from 5.16.0 to 8.6.0 and mocha from 5.2.0 to 9.1.3](https://github.com/nomiddlename/date-format/pull/30) - thanks [@peteriman](https://github.com/peteriman)

+ - [acorn from 6.2.0 to 6.4.2](https://github.com/nomiddlename/date-format/pull/29) - thanks [@Dependabot](https://github.com/dependabot)

+ - [lodash from 4.17.14 to 4.17.21](https://github.com/nomiddlename/date-format/pull/26) - thanks [@Dependabot](https://github.com/dependabot)

+

+## Previous versions

+

+Change information for older versions can be found by looking at the milestones in github.

diff --git a/node_modules/date-format/README.md b/node_modules/date-format/README.md

index cca25e0..3eee99f 100644

--- a/node_modules/date-format/README.md

+++ b/node_modules/date-format/README.md

@@ -1,6 +1,8 @@

-date-format

+date-format [](https://github.com/nomiddlename/date-format/actions/workflows/codeql-analysis.yml) [](https://github.com/nomiddlename/date-format/actions/workflows/node.js.yml)

===========

+[](https://nodei.co/npm/date-format/)

+

node.js formatting of Date objects as strings. Probably exactly the same as some other library out there.

```sh

@@ -15,18 +17,28 @@

```javascript

var format = require('date-format');

-format.asString(); //defaults to ISO8601 format and current date.

-format.asString(new Date()); //defaults to ISO8601 format

-format.asString('hh:mm:ss.SSS', new Date()); //just the time

+format.asString(); // defaults to ISO8601 format and current date

+format.asString(new Date()); // defaults to ISO8601 format

+format.asString('hh:mm:ss.SSS', new Date()); // just the time

+format.asString(format.ISO8601_WITH_TZ_OFFSET_FORMAT, new Date()); // in ISO8601 with timezone

```

or

```javascript

var format = require('date-format');

-format(); //defaults to ISO8601 format and current date.

-format(new Date());

-format('hh:mm:ss.SSS', new Date());

+format(); // defaults to ISO8601 format and current date

+format(new Date()); // defaults to ISO8601 format

+format('hh:mm:ss.SSS', new Date()); // just the time

+format(format.ISO8601_WITH_TZ_OFFSET_FORMAT, new Date()); // in ISO8601 with timezone

+```

+

+**output:**

+```javascript

+2017-03-14T14:10:20.391

+2017-03-14T14:10:20.391

+14:10:20.391

+2017-03-14T14:10:20.391+11:00

```

Format string can be anything, but the following letters will be replaced (and leading zeroes added if necessary):

@@ -38,11 +50,11 @@

* mm - `date.getMinutes()`

* ss - `date.getSeconds()`

* SSS - `date.getMilliseconds()`

-* O - timezone offset in +hm format (note that time will be in UTC if displaying offset)

+* O - timezone offset in ±hh:mm format (note that time will still be local if displaying offset)

Built-in formats:

* `format.ISO8601_FORMAT` - `2017-03-14T14:10:20.391` (local time used)

-* `format.ISO8601_WITH_TZ_OFFSET_FORMAT` - `2017-03-14T03:10:20.391+1100` (UTC + TZ used)

+* `format.ISO8601_WITH_TZ_OFFSET_FORMAT` - `2017-03-14T14:10:20.391+11:00` (local + TZ used)

* `format.DATETIME_FORMAT` - `14 03 2017 14:10:20.391` (local time used)

* `format.ABSOLUTETIME_FORMAT` - `14:10:20.391` (local time used)

@@ -54,5 +66,8 @@

var format = require('date-format');

// pass in the format of the string as first argument

format.parse(format.ISO8601_FORMAT, '2017-03-14T14:10:20.391');

+format.parse(format.ISO8601_WITH_TZ_OFFSET_FORMAT, '2017-03-14T14:10:20.391+1100');

+format.parse(format.ISO8601_WITH_TZ_OFFSET_FORMAT, '2017-03-14T14:10:20.391+11:00');

+format.parse(format.ISO8601_WITH_TZ_OFFSET_FORMAT, '2017-03-14T03:10:20.391Z');

// returns Date

```
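The README change above moves the `O` token from `+hhmm` to `±hh:mm`, with `Z` used for a zero offset. A minimal sketch of that formatting step, following the new `lib/index.js` logic in this patch, is below. `formatOffset` is a hypothetical standalone name; in the library the logic sits inside an internal helper used by `asString`.

```javascript
// Format a Date#getTimezoneOffset() value as "±hh:mm" (or "Z" for UTC).
// getTimezoneOffset() is UTC minus local time in minutes, so its sign is inverted:
// a zone east of UTC (e.g. +11:00) reports a negative value (-660).
function formatOffset(timezoneOffset) {
  if (timezoneOffset === 0) {
    return "Z";
  }
  const os = Math.abs(timezoneOffset);
  const h = String(Math.floor(os / 60)).padStart(2, "0");
  const m = String(os % 60).padStart(2, "0");
  return (timezoneOffset < 0 ? "+" : "-") + h + ":" + m;
}
```

`formatOffset(new Date().getTimezoneOffset())` returns the current machine's offset in the same shape that `ISO8601_WITH_TZ_OFFSET_FORMAT` now appends.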

diff --git a/node_modules/date-format/lib/index.js b/node_modules/date-format/lib/index.js

index 9f8de68..15ec55a 100644

--- a/node_modules/date-format/lib/index.js

+++ b/node_modules/date-format/lib/index.js

@@ -21,13 +21,9 @@

var os = Math.abs(timezoneOffset);

var h = String(Math.floor(os / 60));

var m = String(os % 60);

- if (h.length === 1) {

- h = "0" + h;

- }

- if (m.length === 1) {

- m = "0" + m;

- }

- return timezoneOffset < 0 ? "+" + h + m : "-" + h + m;

+ h = ("0" + h).slice(-2);

+ m = ("0" + m).slice(-2);

+ return timezoneOffset === 0 ? "Z" : (timezoneOffset < 0 ? "+" : "-") + h + ":" + m;

}

function asString(format, date) {

@@ -126,11 +122,14 @@

},

{

pattern: /O/,

- regexp: "[+-]\\d{3,4}|Z",

+ regexp: "[+-]\\d{1,2}:?\\d{2}?|Z",

fn: function(date, value) {

if (value === "Z") {

value = 0;

}

+ else {

+ value = value.replace(":", "");

+ }

var offset = Math.abs(value);

var timezoneOffset = (value > 0 ? -1 : 1 ) * ((offset % 100) + Math.floor(offset / 100) * 60);

// Per ISO8601 standard: UTC = local time - offset
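On the parsing side, the new `O` pattern accepts `Z`, `+hhmm` and `+hh:mm`. A matched value has its colon stripped and is then read as a signed `hhmm` number. The sketch below follows that logic; `parseOffset` is a hypothetical name, and the pattern is anchored with `^(?:...)$` only so it can validate a bare string. As written, `\d{2}?` is a lazy quantifier rather than an optional group, so two minute digits are still required and a bare `+11` is rejected.

```javascript
// The "O" token pattern from the diff, anchored for standalone validation.
const OFFSET_RE = /^(?:[+-]\d{1,2}:?\d{2}?|Z)$/;

// Convert an offset string into getTimezoneOffset()-style minutes.
function parseOffset(value) {
  if (!OFFSET_RE.test(value)) {
    throw new Error("Unrecognised timezone offset: " + value);
  }
  if (value === "Z") {
    return 0;
  }
  // "+11:00" -> "+1100", then treat it as a signed hhmm number.
  const hhmm = Number(value.replace(":", ""));
  const offset = Math.abs(hhmm);
  const minutes = (offset % 100) + Math.floor(offset / 100) * 60;
  // Offsets east of UTC map to negative getTimezoneOffset() values.
  return (hhmm > 0 ? -1 : 1) * minutes;
}
```

With this convention, the README examples `+1100` and `+11:00` both give `-660`, and `Z` gives `0`.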

diff --git a/node_modules/date-format/package.json b/node_modules/date-format/package.json

index 4a527a2..6af0173 100644

--- a/node_modules/date-format/package.json

+++ b/node_modules/date-format/package.json

@@ -1,8 +1,12 @@

{

"name": "date-format",

- "version": "3.0.0",

+ "version": "4.0.7",

"description": "Formatting Date objects as strings since 2013",

"main": "lib/index.js",

+ "files": [

+ "lib",

+ "CHANGELOG.md"

+ ],

"repository": {

"type": "git",

"url": "https://github.com/nomiddlename/date-format.git"

@@ -13,7 +17,7 @@

"scripts": {

"lint": "eslint lib/* test/*",

"pretest": "eslint lib/* test/*",

- "test": "mocha"

+ "test": "nyc --check-coverage mocha"

},

"keywords": [

"date",

@@ -25,9 +29,18 @@

"readmeFilename": "README.md",

"gitHead": "bf59015ab6c9e86454b179374f29debbdb403522",

"devDependencies": {

- "eslint": "^5.16.0",

- "eslint-plugin-mocha": "^5.3.0",

- "mocha": "^5.2.0",

+ "eslint": "^8.13.0",

+ "eslint-plugin-mocha": "^10.0.4",

+ "mocha": "^9.2.2",

+ "nyc": "^15.1.0",

"should": "^13.2.3"

+ },

+ "nyc": {

+ "include": [

+ "lib/**"

+ ],

+ "branches": 100,

+ "lines": 100,

+ "functions": 100

}

}

diff --git a/node_modules/date-format/test/date_format-test.js b/node_modules/date-format/test/date_format-test.js

deleted file mode 100644

index 9bb228a..0000000

--- a/node_modules/date-format/test/date_format-test.js

+++ /dev/null

@@ -1,61 +0,0 @@

-'use strict';

-

-require('should');

-

-var dateFormat = require('../lib');

-

-function createFixedDate() {

- return new Date(2010, 0, 11, 14, 31, 30, 5);

-}

-

-describe('date_format', function() {

- var date = createFixedDate();

-

- it('should default to now when a date is not provided', function() {

- dateFormat.asString(dateFormat.DATETIME_FORMAT).should.not.be.empty();

- });

-

- it('should be usable directly without calling asString', function() {

- dateFormat(dateFormat.DATETIME_FORMAT, date).should.eql('11 01 2010 14:31:30.005');

- });

-

- it('should format a date as string using a pattern', function() {

- dateFormat.asString(dateFormat.DATETIME_FORMAT, date).should.eql('11 01 2010 14:31:30.005');

- });

-

- it('should default to the ISO8601 format', function() {

- dateFormat.asString(date).should.eql('2010-01-11T14:31:30.005');

- });

-

- it('should provide a ISO8601 with timezone offset format', function() {

- var tzDate = createFixedDate();

- tzDate.getTimezoneOffset = function () {

- return -660;

- };

-

- // when tz offset is in the pattern, the date should be in local time

- dateFormat.asString(dateFormat.ISO8601_WITH_TZ_OFFSET_FORMAT, tzDate)

- .should.eql('2010-01-11T14:31:30.005+1100');

-

- tzDate = createFixedDate();

- tzDate.getTimezoneOffset = function () {

- return 120;

- };

-

- dateFormat.asString(dateFormat.ISO8601_WITH_TZ_OFFSET_FORMAT, tzDate)

- .should.eql('2010-01-11T14:31:30.005-0200');

- });

-

- it('should provide a just-the-time format', function() {

- dateFormat.asString(dateFormat.ABSOLUTETIME_FORMAT, date).should.eql('14:31:30.005');

- });

-

- it('should provide a custom format', function() {

- var customDate = createFixedDate();

- customDate.getTimezoneOffset = function () {

- return 120;

- };

-

- dateFormat.asString('O.SSS.ss.mm.hh.dd.MM.yy', customDate).should.eql('-0200.005.30.31.14.11.01.10');

- });

-});

diff --git a/node_modules/date-format/test/parse-test.js b/node_modules/date-format/test/parse-test.js

deleted file mode 100644

index 9f72982..0000000

--- a/node_modules/date-format/test/parse-test.js

+++ /dev/null

@@ -1,220 +0,0 @@

-"use strict";

-

-require("should");

-var dateFormat = require("../lib");

-

-describe("dateFormat.parse", function() {

- it("should require a pattern", function() {

- (function() {

- dateFormat.parse();

- }.should.throw(/pattern must be supplied/));

- (function() {

- dateFormat.parse(null);

- }.should.throw(/pattern must be supplied/));

- (function() {

- dateFormat.parse("");

- }.should.throw(/pattern must be supplied/));

- });

-

- describe("with a pattern that has no replacements", function() {

- it("should return a new date when the string matches", function() {

- dateFormat.parse("cheese", "cheese").should.be.a.Date();

- });

-

- it("should throw if the string does not match", function() {

- (function() {

- dateFormat.parse("cheese", "biscuits");

- }.should.throw(/String 'biscuits' could not be parsed as 'cheese'/));

- });

- });

-

- describe("with a full pattern", function() {

- var pattern = "yyyy-MM-dd hh:mm:ss.SSSO";

-

- it("should return the correct date if the string matches", function() {

- var testDate = new Date();

- testDate.setUTCFullYear(2018);

- testDate.setUTCMonth(8);

- testDate.setUTCDate(13);

- testDate.setUTCHours(18);

- testDate.setUTCMinutes(10);

- testDate.setUTCSeconds(12);

- testDate.setUTCMilliseconds(392);

-

- dateFormat

- .parse(pattern, "2018-09-14 04:10:12.392+1000")

- .getTime()

- .should.eql(testDate.getTime())

- ;

- });

-

- it("should throw if the string does not match", function() {

- (function() {

- dateFormat.parse(pattern, "biscuits");

- }.should.throw(

- /String 'biscuits' could not be parsed as 'yyyy-MM-dd hh:mm:ss.SSSO'/

- ));

- });

- });

-

- describe("with a partial pattern", function() {

- var testDate = new Date();

- dateFormat.now = function() {

- return testDate;

- };

-

- /**

- * If there's no timezone in the format, then we verify against the local date

- */

- function verifyLocalDate(actual, expected) {

- actual.getFullYear().should.eql(expected.year || testDate.getFullYear());

- actual.getMonth().should.eql(expected.month || testDate.getMonth());

- actual.getDate().should.eql(expected.day || testDate.getDate());

- actual.getHours().should.eql(expected.hours || testDate.getHours());

- actual.getMinutes().should.eql(expected.minutes || testDate.getMinutes());

- actual.getSeconds().should.eql(expected.seconds || testDate.getSeconds());

- actual

- .getMilliseconds()

- .should.eql(expected.milliseconds || testDate.getMilliseconds());

- }

-

- /**

- * If a timezone is specified, let's verify against the UTC time it is supposed to be

- */

- function verifyDate(actual, expected) {

- actual.getUTCFullYear().should.eql(expected.year || testDate.getUTCFullYear());

- actual.getUTCMonth().should.eql(expected.month || testDate.getUTCMonth());

- actual.getUTCDate().should.eql(expected.day || testDate.getUTCDate());

- actual.getUTCHours().should.eql(expected.hours || testDate.getUTCHours());

- actual.getUTCMinutes().should.eql(expected.minutes || testDate.getUTCMinutes());

- actual.getUTCSeconds().should.eql(expected.seconds || testDate.getUTCSeconds());

- actual

- .getMilliseconds()

- .should.eql(expected.milliseconds || testDate.getMilliseconds());

- }

-

- it("should return a date with missing values defaulting to current time", function() {

- var date = dateFormat.parse("yyyy-MM", "2015-09");

- verifyLocalDate(date, { year: 2015, month: 8 });

- });

-

- it("should use a passed in date for missing values", function() {

- var missingValueDate = new Date(2010, 1, 8, 22, 30, 12, 100);

- var date = dateFormat.parse("yyyy-MM", "2015-09", missingValueDate);

- verifyLocalDate(date, {

- year: 2015,

- month: 8,

- day: 8,

- hours: 22,

- minutes: 30,

- seconds: 12,

- milliseconds: 100

- });

- });

-

- it("should handle variations on the same pattern", function() {

- var date = dateFormat.parse("MM-yyyy", "09-2015");

- verifyLocalDate(date, { year: 2015, month: 8 });

-

- date = dateFormat.parse("yyyy MM", "2015 09");

- verifyLocalDate(date, { year: 2015, month: 8 });

-

- date = dateFormat.parse("MM, yyyy.", "09, 2015.");

- verifyLocalDate(date, { year: 2015, month: 8 });

- });

-

- describe("should match all the date parts", function() {

- it("works with dd", function() {

- var date = dateFormat.parse("dd", "21");

- verifyLocalDate(date, { day: 21 });

- });

-

- it("works with hh", function() {

- var date = dateFormat.parse("hh", "12");

- verifyLocalDate(date, { hours: 12 });

- });

-

- it("works with mm", function() {

- var date = dateFormat.parse("mm", "34");

- verifyLocalDate(date, { minutes: 34 });

- });

-

- it("works with ss", function() {

- var date = dateFormat.parse("ss", "59");

- verifyLocalDate(date, { seconds: 59 });

- });

-

- it("works with ss.SSS", function() {

- var date = dateFormat.parse("ss.SSS", "23.452");

- verifyLocalDate(date, { seconds: 23, milliseconds: 452 });

- });

-

- it("works with hh:mm O (+1000)", function() {

- var date = dateFormat.parse("hh:mm O", "05:23 +1000");

- verifyDate(date, { hours: 19, minutes: 23 });

- });

-

- it("works with hh:mm O (-200)", function() {

- var date = dateFormat.parse("hh:mm O", "05:23 -200");

- verifyDate(date, { hours: 7, minutes: 23 });

- });

-

- it("works with hh:mm O (+0930)", function() {

- var date = dateFormat.parse("hh:mm O", "05:23 +0930");

- verifyDate(date, { hours: 19, minutes: 53 });

- });

- });

- });

-

- describe("with a date formatted by this library", function() {

- describe("should format and then parse back to the same date", function() {

- function testDateInitWithUTC() {

- var td = new Date();

- td.setUTCFullYear(2018);

- td.setUTCMonth(8);

- td.setUTCDate(13);

- td.setUTCHours(18);

- td.setUTCMinutes(10);

- td.setUTCSeconds(12);

- td.setUTCMilliseconds(392);

- return td;

- }

-

- it("works with ISO8601_WITH_TZ_OFFSET_FORMAT", function() {

- // For this test case to work, the date object must be initialized with

- // UTC timezone

- var td = testDateInitWithUTC();

- var d = dateFormat(dateFormat.ISO8601_WITH_TZ_OFFSET_FORMAT, td);

- dateFormat.parse(dateFormat.ISO8601_WITH_TZ_OFFSET_FORMAT, d)

- .should.eql(td);

- });

-

- it("works with ISO8601_FORMAT", function() {

- var td = new Date();

- var d = dateFormat(dateFormat.ISO8601_FORMAT, td);

- var actual = dateFormat.parse(dateFormat.ISO8601_FORMAT, d);

- actual.should.eql(td);

- });

-

- it("works with DATETIME_FORMAT", function() {

- var testDate = new Date();

- dateFormat

- .parse(

- dateFormat.DATETIME_FORMAT,

- dateFormat(dateFormat.DATETIME_FORMAT, testDate)

- )

- .should.eql(testDate);

- });

-

- it("works with ABSOLUTETIME_FORMAT", function() {

- var testDate = new Date();

- dateFormat

- .parse(

- dateFormat.ABSOLUTETIME_FORMAT,

- dateFormat(dateFormat.ABSOLUTETIME_FORMAT, testDate)

- )

- .should.eql(testDate);

- });

- });

- });

-});

diff --git a/node_modules/engine.io-parser/CHANGELOG.md b/node_modules/engine.io-parser/CHANGELOG.md

deleted file mode 100644

index 63421c7..0000000

--- a/node_modules/engine.io-parser/CHANGELOG.md

+++ /dev/null

@@ -1,133 +0,0 @@

-## [5.0.2](https://github.com/socketio/engine.io-parser/compare/5.0.1...5.0.2) (2021-11-14)

-

-

-### Bug Fixes

-

-* add package name in nested package.json ([7e27159](https://github.com/socketio/engine.io-parser/commit/7e271596c3305fb4e4a9fbdcc7fd442e8ff71200))

-* fix vite build for CommonJS users ([5f22ed0](https://github.com/socketio/engine.io-parser/commit/5f22ed0527cc80aa0cac415dfd12db2f94f0a855))

-

-

-

-## [5.0.1](https://github.com/socketio/engine.io-parser/compare/5.0.0...5.0.1) (2021-10-15)

-

-

-### Bug Fixes

-

-* fix vite build ([900346e](https://github.com/socketio/engine.io-parser/commit/900346ea34ddc178d80eaabc8ea516d929457855))

-

-

-

-# [5.0.0](https://github.com/socketio/engine.io-parser/compare/4.0.3...5.0.0) (2021-10-04)

-

-This release includes the migration to TypeScript. The major bump is due to the new "exports" field in the package.json file.

-

-See also: https://nodejs.org/api/packages.html#packages_package_entry_points

-

-## [4.0.3](https://github.com/socketio/engine.io-parser/compare/4.0.2...4.0.3) (2021-08-29)

-

-

-### Bug Fixes

-

-* respect the offset and length of TypedArray objects ([6d7dd76](https://github.com/socketio/engine.io-parser/commit/6d7dd76130690afda6c214d5c04305d2bbc4eb4d))

-

-

-## [4.0.2](https://github.com/socketio/engine.io-parser/compare/4.0.1...4.0.2) (2020-12-07)

-

-

-### Bug Fixes

-

-* add base64-arraybuffer as prod dependency ([2ccdeb2](https://github.com/socketio/engine.io-parser/commit/2ccdeb277955bed8742a29f2dcbbf57ca95eb12a))

-

-

-## [2.2.1](https://github.com/socketio/engine.io-parser/compare/2.2.0...2.2.1) (2020-09-30)

-

-

-## [4.0.1](https://github.com/socketio/engine.io-parser/compare/4.0.0...4.0.1) (2020-09-10)

-

-

-### Bug Fixes

-

-* use a terser-compatible representation of the separator ([886f9ea](https://github.com/socketio/engine.io-parser/commit/886f9ea7c4e717573152c31320f6fb6c6664061b))

-

-

-# [4.0.0](https://github.com/socketio/engine.io-parser/compare/v4.0.0-alpha.1...4.0.0) (2020-09-08)

-

-This major release contains the necessary changes for the version 4 of the Engine.IO protocol. More information about the new version can be found [there](https://github.com/socketio/engine.io-protocol#difference-between-v3-and-v4).

-

-Encoding changes between v3 and v4:

-

-- encodePacket with string

- - input: `{ type: "message", data: "hello" }`

- - output in v3: `"4hello"`

- - output in v4: `"4hello"`

-

-- encodePacket with binary

- - input: `{ type: 'message', data: <Buffer 01 02 03> }`

- - output in v3: `<Buffer 04 01 02 03>`

- - output in v4: `<Buffer 01 02 03>`

-

-- encodePayload with strings

- - input: `[ { type: 'message', data: 'hello' }, { type: 'message', data: '€€€' } ]`

- - output in v3: `"6:4hello4:4€€€"`

- - output in v4: `"4hello\x1e4€€€"`

-

-- encodePayload with string and binary

- - input: `[ { type: 'message', data: 'hello' }, { type: 'message', data: <Buffer 01 02 03> } ]`

- - output in v3: `<Buffer 00 06 ff 34 68 65 6c 6c 6f 01 04 ff 04 01 02 03>`

- - output in v4: `"4hello\x1ebAQID"`

-

-Please note that the parser is now dependency-free! This should help reduce the size of the browser bundle.

-

-### Bug Fixes

-

-* keep track of the buffer initial length ([8edf2d1](https://github.com/socketio/engine.io-parser/commit/8edf2d1478026da442f519c2d2521af43ba01832))

-

-

-### Features

-

-* restore the upgrade mechanism ([6efedfa](https://github.com/socketio/engine.io-parser/commit/6efedfa0f3048506a4ba99e70674ddf4c0732e0c))

-

-

-

-# [4.0.0-alpha.1](https://github.com/socketio/engine.io-parser/compare/v4.0.0-alpha.0...v4.0.0-alpha.1) (2020-05-19)

-

-

-### Features

-

-* implement the version 4 of the protocol ([cab7db0](https://github.com/socketio/engine.io-parser/commit/cab7db0404e0a69f86a05ececd62c8c31f4d97d5))

-

-

-

-# [4.0.0-alpha.0](https://github.com/socketio/engine.io-parser/compare/2.2.0...v4.0.0-alpha.0) (2020-02-04)

-

-

-### Bug Fixes

-

-* properly decode binary packets ([5085373](https://github.com/socketio/engine.io-parser/commit/50853738e0c6c16f9cee0d7887651155f4b78240))

-

-

-### Features

-

-* remove packet type when encoding binary packets ([a947ae5](https://github.com/socketio/engine.io-parser/commit/a947ae59a2844e4041db58ff36b270d1528b3bee))

-

-

-### BREAKING CHANGES

-

-* the packet containing binary data will now be sent without any transformation

-

-Protocol v3: { type: 'message', data: <Buffer 01 02 03> } => <Buffer 04 01 02 03>

-Protocol v4: { type: 'message', data: <Buffer 01 02 03> } => <Buffer 01 02 03>

-

-

-

-# [2.2.0](https://github.com/socketio/engine.io-parser/compare/2.1.3...2.2.0) (2019-09-13)

-

-

-* [refactor] Use `Buffer.allocUnsafe` instead of `new Buffer` (#104) ([aedf8eb](https://github.com/socketio/engine.io-parser/commit/aedf8eb29e8bf6aeb5c6cc68965d986c4c958ae2)), closes [#104](https://github.com/socketio/engine.io-parser/issues/104)

-

-

-### BREAKING CHANGES

-

-* drop support for Node.js 4 (since Buffer.allocUnsafe was added in v5.10.0)

-

-Reference: https://nodejs.org/docs/latest/api/buffer.html#buffer_class_method_buffer_allocunsafe_size
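
The removed changelog lists the v4 encoding rules by example:

- string packets keep a one-character type prefix;
- standalone binary packets lose their type byte;
- a payload is a single string, with packets joined by the `\x1e` record separator and binary entries written as `b` + base64.

Those rules can be sketched in TypeScript as follows. This is a minimal illustration, not engine.io-parser's implementation. The helper names, the `Uint8Array`-only binary type and the hand-rolled base64 encoder (used only so the block has no dependencies) are assumptions.

```typescript
// Sketch of the Engine.IO v4 encoding rules from the changelog examples.
// Hypothetical helpers; NOT engine.io-parser's actual source.

type PacketType = "open" | "close" | "ping" | "pong" | "message" | "upgrade" | "noop";

interface Packet {
  type: PacketType;
  data?: string | Uint8Array; // assumption: binary is modelled as Uint8Array only
}

// One-character type prefixes used for string packets.
const PACKET_TYPES: Record<PacketType, string> = {
  open: "0", close: "1", ping: "2", pong: "3", message: "4", upgrade: "5", noop: "6",
};

// Record separator (char code 30) placed between packets in a v4 payload.
const SEPARATOR = "\x1e";

const B64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Standard padded base64, written by hand to keep the sketch self-contained.
function toBase64(bytes: Uint8Array): string {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const b0 = bytes[i];
    const b1 = i + 1 < bytes.length ? bytes[i + 1] : 0;
    const b2 = i + 2 < bytes.length ? bytes[i + 2] : 0;
    const n = (b0 << 16) | (b1 << 8) | b2;
    out += B64[(n >> 18) & 63] + B64[(n >> 12) & 63];
    out += i + 1 < bytes.length ? B64[(n >> 6) & 63] : "=";
    out += i + 2 < bytes.length ? B64[n & 63] : "=";
  }
  return out;
}

// v4 encodePacket: strings get the type prefix, binary is passed through untouched.
function encodePacket(packet: Packet): string | Uint8Array {
  if (packet.data instanceof Uint8Array) {
    return packet.data;
  }
  return PACKET_TYPES[packet.type] + (packet.data ?? "");
}

// v4 encodePayload: every packet becomes a string (binary as "b" + base64),
// and the packets are joined with the record separator.
function encodePayload(packets: Packet[]): string {
  return packets
    .map((p) => (p.data instanceof Uint8Array ? "b" + toBase64(p.data) : (encodePacket(p) as string)))
    .join(SEPARATOR);
}

const bytes = new Uint8Array([1, 2, 3]);
console.log(JSON.stringify(encodePayload([
  { type: "message", data: "hello" },
  { type: "message", data: bytes },
])));
```

The same three bytes leave `encodePacket` as raw binary but appear as `bAQID` inside a payload. The v4 payload is one text string, so binary entries must be base64-encoded to fit in it.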

diff --git a/node_modules/engine.io-parser/build/cjs/decodePacket.browser.js b/node_modules/engine.io-parser/build/cjs/decodePacket.browser.js

index 9246825..e85a136 100644

--- a/node_modules/engine.io-parser/build/cjs/decodePacket.browser.js

+++ b/node_modules/engine.io-parser/build/cjs/decodePacket.browser.js

@@ -1,7 +1,7 @@

"use strict";

Object.defineProperty(exports, "__esModule", { value: true });

const commons_js_1 = require("./commons.js");

-const base64_arraybuffer_1 = require("base64-arraybuffer");

+const base64_arraybuffer_1 = require("@socket.io/base64-arraybuffer");

const withNativeArrayBuffer = typeof ArrayBuffer === "function";

const decodePacket = (encodedPacket, binaryType) => {

if (typeof encodedPacket !== "string") {

diff --git a/node_modules/engine.io-parser/build/cjs/decodePacket.js b/node_modules/engine.io-parser/build/cjs/decodePacket.js

index 6d62ba9..2dbe0f8 100644

--- a/node_modules/engine.io-parser/build/cjs/decodePacket.js

+++ b/node_modules/engine.io-parser/build/cjs/decodePacket.js

@@ -38,7 +38,7 @@

return data; // assuming the data is already a Buffer

}

};

-const toArrayBuffer = buffer => {

+const toArrayBuffer = (buffer) => {

const arrayBuffer = new ArrayBuffer(buffer.length);

const view = new Uint8Array(arrayBuffer);

for (let i = 0; i < buffer.length; i++) {
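
The `toArrayBuffer` helper in this hunk copies the bytes into a fresh `ArrayBuffer` rather than reusing the Buffer's backing store. That distinction relates to the 4.0.3 fix ("respect the offset and length of TypedArray objects") in the removed changelog. A `Buffer` or other TypedArray can be a view over a larger backing store (Node.js pools small Buffers), so its `.buffer` may hold unrelated bytes. The sketch below shows an offset-aware copy. The name `viewToArrayBuffer` is hypothetical, and this is not the parser's actual code.

```typescript
// Hypothetical helper: copy exactly the bytes a view covers into a new ArrayBuffer.
function viewToArrayBuffer(view: ArrayBufferView): ArrayBuffer {
  // Reinterpret the view's window as bytes, honoring byteOffset and byteLength.
  const bytes = new Uint8Array(view.buffer, view.byteOffset, view.byteLength);
  const copy = new ArrayBuffer(view.byteLength);
  new Uint8Array(copy).set(bytes);
  return copy;
}

// A 3-byte window into a 5-byte backing store.
const backing = new Uint8Array([9, 1, 2, 3, 9]);
const window3 = backing.subarray(1, 4);

console.log(window3.buffer.byteLength);                              // 5: the whole backing store
console.log(Array.from(new Uint8Array(viewToArrayBuffer(window3)))); // [ 1, 2, 3 ]
```

Returning `window3.buffer` directly would leak the two surrounding `9` bytes. Copying through a `Uint8Array` built from `byteOffset` and `byteLength` keeps only the intended window, and it works for any `ArrayBufferView`, including `DataView`.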

diff --git a/node_modules/engine.io-parser/build/esm/commons.d.ts b/node_modules/engine.io-parser/build/esm/commons.d.ts

index 9c3bde1..b0b0941 100644

--- a/node_modules/engine.io-parser/build/esm/commons.d.ts

+++ b/node_modules/engine.io-parser/build/esm/commons.d.ts

@@ -1,7 +1,15 @@

+/// <reference types="node" />

declare const PACKET_TYPES: any;

declare const PACKET_TYPES_REVERSE: any;

-declare const ERROR_PACKET: {

- type: string;

- data: string;

-};

+declare const ERROR_PACKET: Packet;

export { PACKET_TYPES, PACKET_TYPES_REVERSE, ERROR_PACKET };

+export declare type PacketType = "open" | "close" | "ping" | "pong" | "message" | "upgrade" | "noop" | "error";

+export declare type RawData = string | Buffer | ArrayBuffer | ArrayBufferView | Blob;

+export interface Packet {

+ type: PacketType;

+ options?: {

+ compress: boolean;

+ };

+ data?: RawData;

+}

+export declare type BinaryType = "nodebuffer" | "arraybuffer" | "blob";

diff --git a/node_modules/engine.io-parser/build/esm/decodePacket.browser.d.ts b/node_modules/engine.io-parser/build/esm/decodePacket.browser.d.ts

index 8c928e1..e4045d6 100644

--- a/node_modules/engine.io-parser/build/esm/decodePacket.browser.d.ts

+++ b/node_modules/engine.io-parser/build/esm/decodePacket.browser.d.ts

@@ -1,11 +1,3 @@

-declare const decodePacket: (encodedPacket: any, binaryType: any) => {

- type: string;

- data: any;

-} | {

- type: any;

- data: string;

-} | {

- type: any;

- data?: undefined;

-};

+import { Packet, BinaryType, RawData } from "./commons.js";

+declare const decodePacket: (encodedPacket: RawData, binaryType?: BinaryType) => Packet;

export default decodePacket;

diff --git a/node_modules/engine.io-parser/build/esm/decodePacket.browser.js b/node_modules/engine.io-parser/build/esm/decodePacket.browser.js

index a4c3f64..b1dae76 100644

--- a/node_modules/engine.io-parser/build/esm/decodePacket.browser.js

+++ b/node_modules/engine.io-parser/build/esm/decodePacket.browser.js

@@ -1,5 +1,5 @@

import { ERROR_PACKET, PACKET_TYPES_REVERSE } from "./commons.js";

-import { decode } from "base64-arraybuffer";

+import { decode } from "@socket.io/base64-arraybuffer";

const withNativeArrayBuffer = typeof ArrayBuffer === "function";

const decodePacket = (encodedPacket, binaryType) => {

if (typeof encodedPacket !== "string") {

diff --git a/node_modules/engine.io-parser/build/esm/decodePacket.d.ts b/node_modules/engine.io-parser/build/esm/decodePacket.d.ts

index 9f5ec24..e4045d6 100644

--- a/node_modules/engine.io-parser/build/esm/decodePacket.d.ts

+++ b/node_modules/engine.io-parser/build/esm/decodePacket.d.ts

@@ -1,11 +1,3 @@

-declare const decodePacket: (encodedPacket: any, binaryType?: any) => {

- type: string;

- data: any;

-} | {

- type: any;

- data: string;

-} | {

- type: any;

- data?: undefined;

-};

+import { Packet, BinaryType, RawData } from "./commons.js";

+declare const decodePacket: (encodedPacket: RawData, binaryType?: BinaryType) => Packet;

export default decodePacket;
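
With this change `decodePacket` is declared as `(encodedPacket: RawData, binaryType?: BinaryType) => Packet` instead of returning an ad-hoc union type. A simplified decoder that fits that shape and follows the v4 rules from the changelog is sketched below. Assumptions:

- only `string` and `Uint8Array` inputs are handled, with no `binaryType` conversion;
- the base64 decoder is hand-written to keep the block self-contained;
- the `ERROR_PACKET` payload text is illustrative.

Treat it as an outline, not the library's source.

```typescript
// Simplified v4 decoder matching the Packet-returning signature above.
// Assumption: binary input is a Uint8Array; binaryType handling is omitted.

type PacketType = "open" | "close" | "ping" | "pong" | "message" | "upgrade" | "noop" | "error";

interface Packet {
  type: PacketType;
  data?: string | Uint8Array;
}

// Illustrative error packet returned for input that cannot be parsed.
const ERROR_PACKET: Packet = { type: "error", data: "parser error" };

// Maps the leading character of a string packet back to its type.
const PACKET_TYPES_REVERSE: Record<string, PacketType> = {
  "0": "open", "1": "close", "2": "ping", "3": "pong", "4": "message", "5": "upgrade", "6": "noop",
};

const B64 = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Hand-written base64 decoder: accumulate 6 bits per char, emit each full byte.
function fromBase64(s: string): Uint8Array {
  const clean = s.replace(/=+$/, "");
  const out: number[] = [];
  let acc = 0;
  let bits = 0;
  for (let i = 0; i < clean.length; i++) {
    const v = B64.indexOf(clean[i]);
    if (v < 0) throw new Error("invalid base64");
    acc = ((acc << 6) | v) & 0xffff; // only the low bits are ever needed
    bits += 6;
    if (bits >= 8) {
      bits -= 8;
      out.push((acc >> bits) & 0xff);
    }
  }
  return Uint8Array.from(out);
}

function decodePacket(encoded: string | Uint8Array): Packet {
  if (typeof encoded !== "string") {
    // v4 binary frames carry no type prefix; treat them as message packets.
    return { type: "message", data: encoded };
  }
  const first = encoded.charAt(0);
  if (first === "b") {
    // Base64-encoded binary, as found inside a payload.
    return { type: "message", data: fromBase64(encoded.substring(1)) };
  }
  const type = PACKET_TYPES_REVERSE[first];
  if (!type) {
    return ERROR_PACKET;
  }
  return encoded.length > 1 ? { type, data: encoded.substring(1) } : { type };
}

console.log(decodePacket("4hello"));
console.log(decodePacket("bAQID"));
```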

diff --git a/node_modules/engine.io-parser/build/esm/decodePacket.js b/node_modules/engine.io-parser/build/esm/decodePacket.js

index cf976fd..58ca8eb 100644

--- a/node_modules/engine.io-parser/build/esm/decodePacket.js

+++ b/node_modules/engine.io-parser/build/esm/decodePacket.js

@@ -36,7 +36,7 @@